List of notes

-

Hands on SQL introduction/ tutorial

Orginally created by Darrell Aucoin in conjuection with the Stats Club, find the original here.

Installation of SQLite Browser

Windows

Download and install the file below

Mac

Download and install the package below

Ubuntu and Linux Mint

In terminal execute the following command

sudo apt-get install sqlitebrowserGet the material

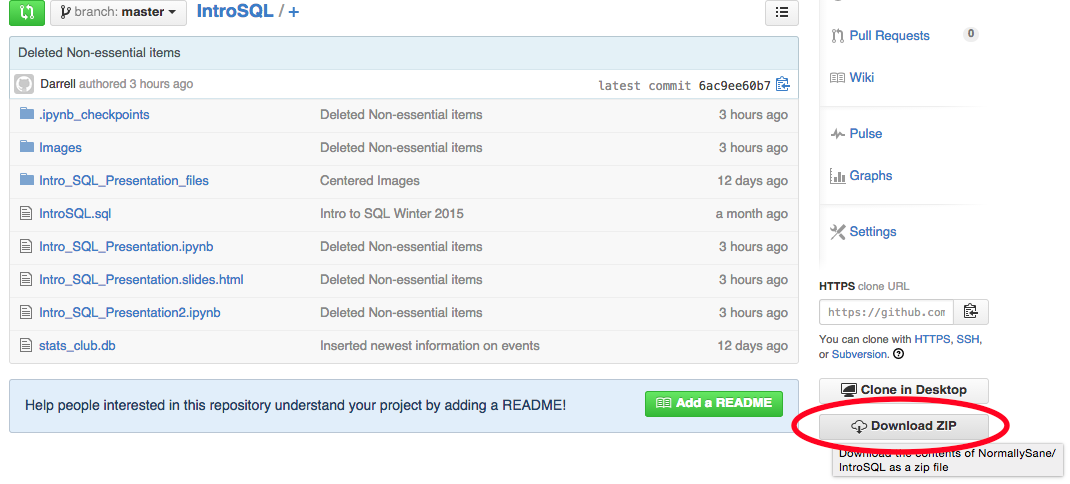

- Go to https://github.com/NormallySane/IntroSQL

- Download zip

file

- Unzip downloaded file in your preferred directory

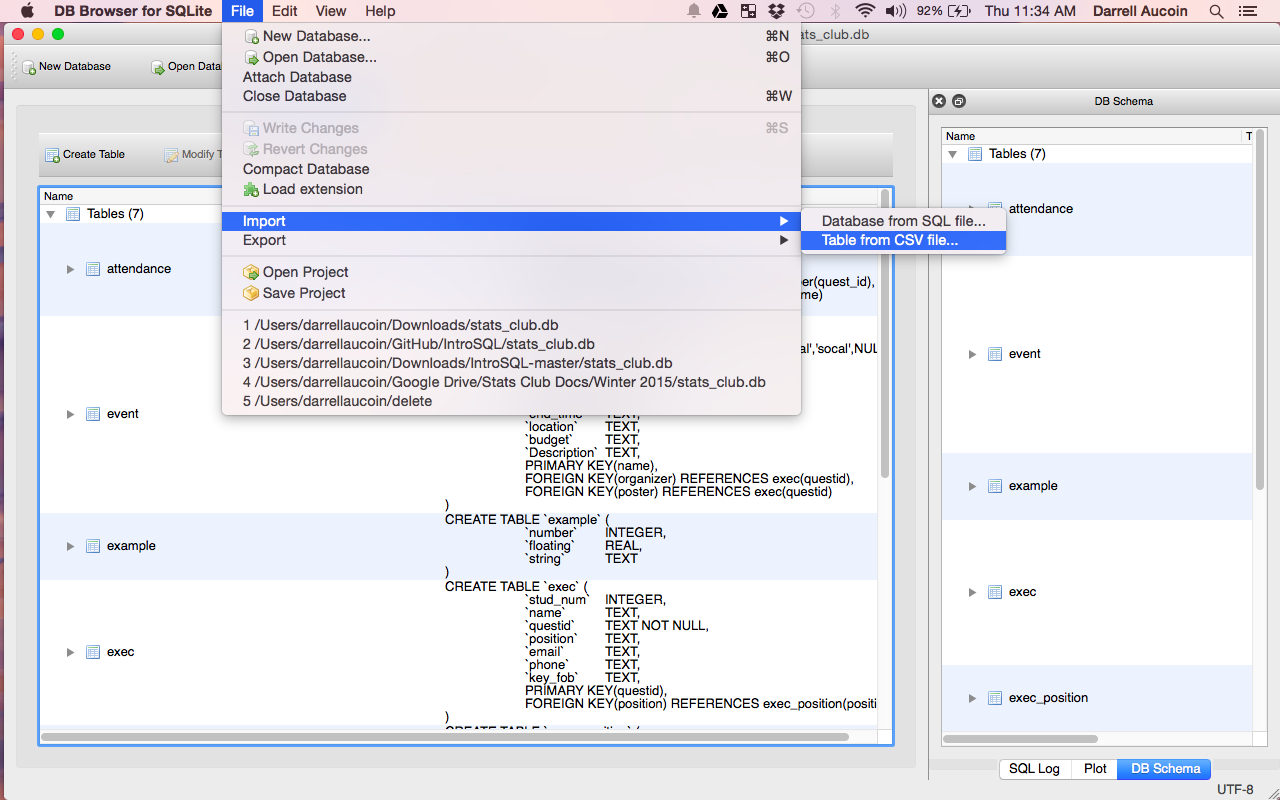

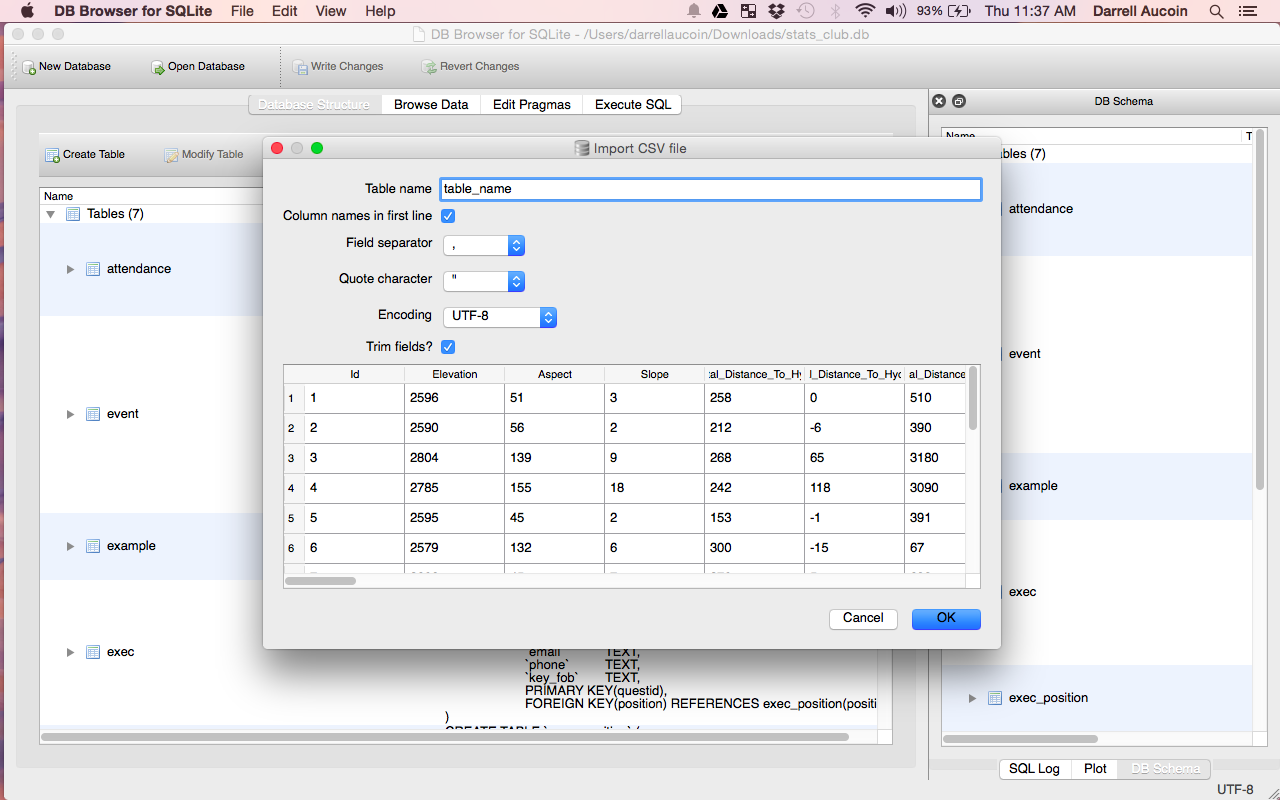

Using SQLite Browser

- Open SQLite browser

- Open

stats_club.dbdatabase file in the downloaded directory - Click on ‘Execute SQL’ tab

- Open SQL file

IntroSQL_Presentation1.sqlfile in the downloaded directory,IntroSQL_Presentation2.sqlfor second presentation. - Follow along with file, executing statement as topics dictate

- Open SQL file

- Content of the talk is on https://github.com/NormallySane/IntroSQL/wiki (open in your favorite browser)

- SQLite browser is a great tool for learning SQLite and SQL in general

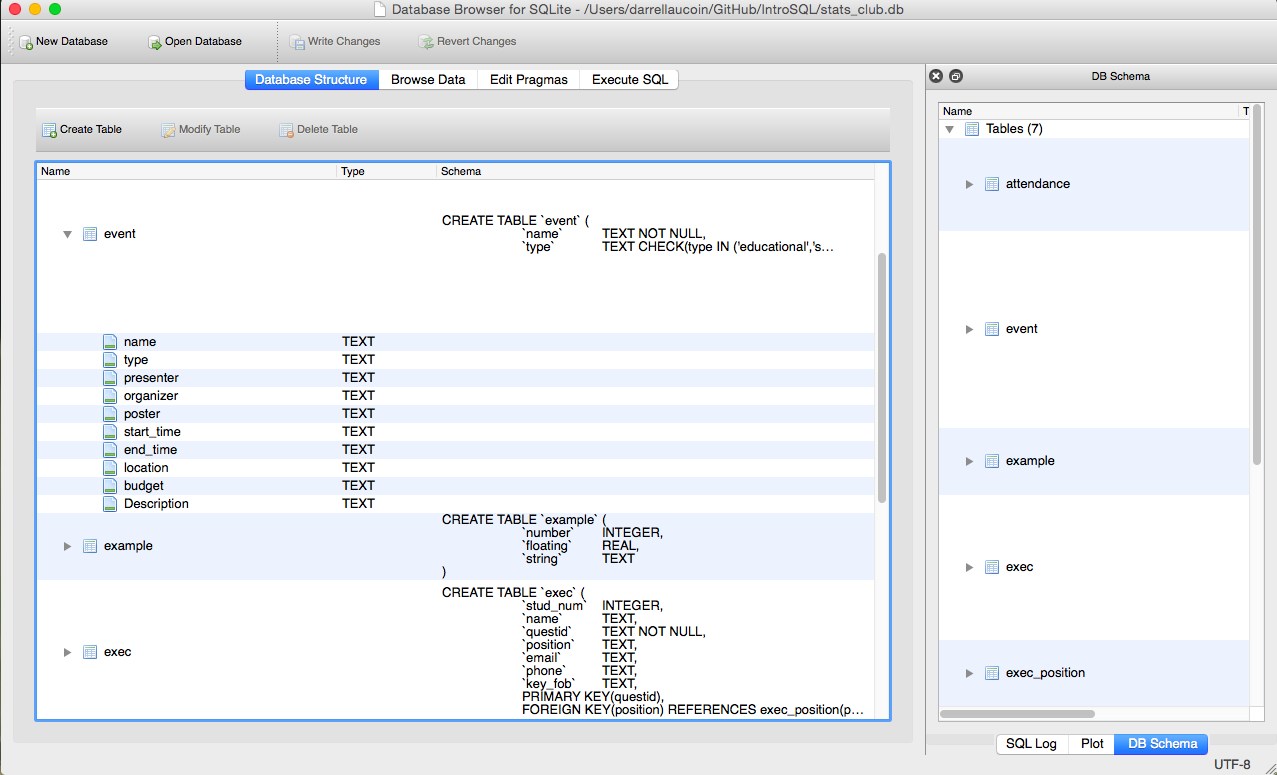

Features of SQLite Browser

- Lets you see the data structure of tables in the database

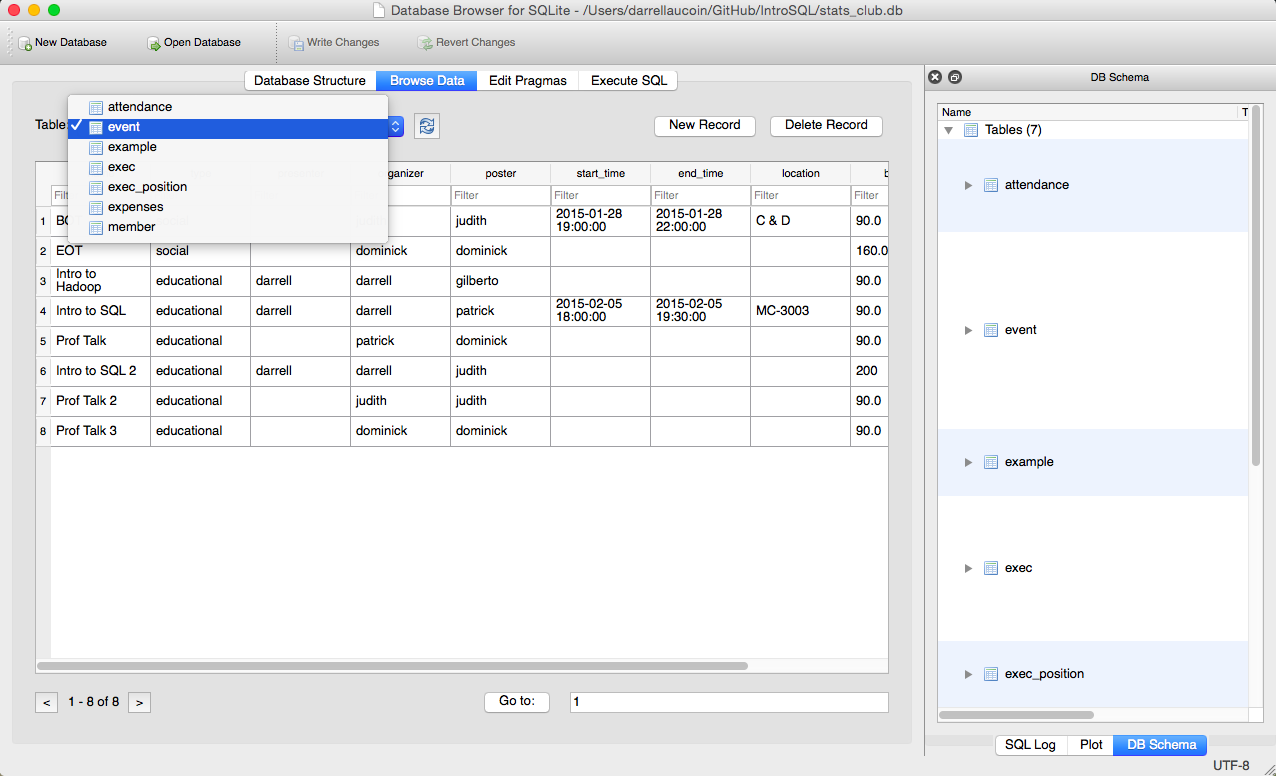

- Explore the data entered in tables

- Execute SQL statements and see results

- Easily construction tables from files

- If the table is already created it will just import the data into the table, otherwise it will create a new table

- SQLite functionality can also be extended by various user created extensions

Motivation

Q: Why learn a database lanugage?

- Data is rarely tidy as they are in many stats courses

- Most of the time the data we want (outside of assignments) is in a database

Q: Why learn SQL?

- One of the most popular data analysis tools (O’Reilly Data Scientist Survey for 2014)

- One of the most in-demand skills for 2014 (Workopolis)

- SQL not only can retrieve data, but can slice, dice and transform the data as needed

Q: What is SQL?

- A programming language allowing multiple concurrent users storing, manipulating, and querying data stored in a relational database.

- Data does not necessary have to fit into memory.

Q: Why use SQLite?

- Very easy to install

- Easy to share (database is a single file)

- Good SQL language to practice with (can easily use it to preprocess data for kaggle competitions)

Different Implementations of SQL

MySQL: Highly popular open source SQL implementation.

PostgreSQL: Open source SQL designed around letting users create User Defined Functions (UDF).

SQLite: Open sources light weight SQL usually used as an embedded database for applications (web browsers, mobile applications, etc.), or light to medium traffic websites. Database is saved as a single file and only allows one writer at a time. Various OS’s have SQLite preinstalled (type

sqlite3in terminal for mac)Oracle: SQL implementation produced and marketed by Oracle Corporation.

Microsoft SQL Server: SQL implementation developed by Microsoft.

DB2: SQL developed by IBM. The database used by University of Waterloo.

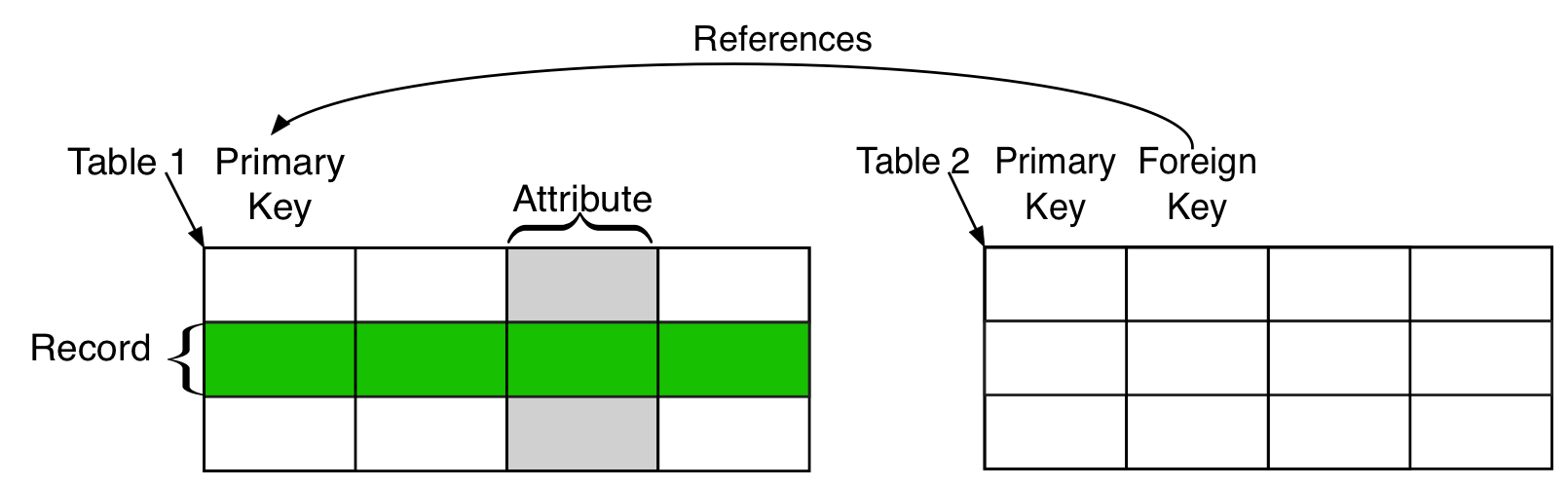

Relational Databases

Relational Database: A relational database is a system organized in tables containing records (rows) related to other records in other tables. Each entity having attributes (columns also called fields) which give additional information on an entity.

Field, Attribute (Column): An individual piece of data stored in a table.

Record (Row): A tuple of columns describing an entity or action of an entity.

Table: A collection of rows. Usually in reference a persistent table saved permanently to memory

Result set: A non-persistent table, usually the result of a query.

(Virtual) View: A named query saved into memory and performed whenever it is named. Some SQL servers have materialized views that permanently save the data for faster access.

Subquery: A query that returns a table to another query.

Primary key: A tuple of columns that uniquely define each row in a table.

Foreign key: A tuple of columns identifying a relationship to another table.

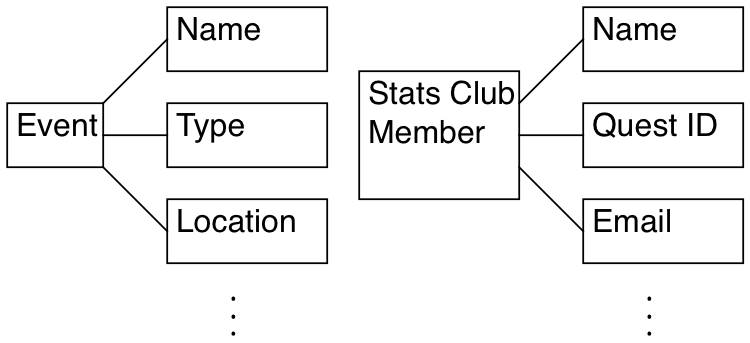

The E-R Model

The E-R (entity-relationship) Model is an analysis tool for relational databases. E refers to entity: An object R refers to relationship: As in how objects relate to each other.

Each entity has properties associated with every instance of an object.

Objects are related to each other through relationships:

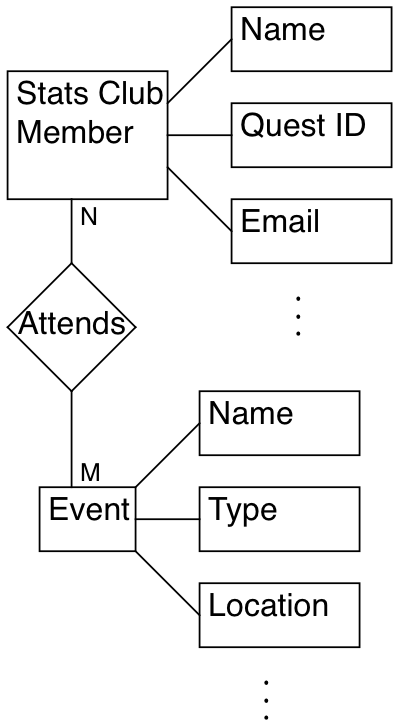

E-R Relationships

- One to one relationships: For every instance of object A, there are

exactly one instance of object B.

- Every student has exactly one quest account

- One to many relationships: For every instance of object A, there are an

indeterminate number of instances of object B.

- A single course section has many students

- Many to one relationships: For many instances of object A, there are a

single instance of object B.

- Many students are enrolled in a course section

- Many to many relationships: For many instances of object A, there are a

many possible instances of object B.

- Many students take many courses

Normalization

Database Normalization is a process in relational databases to minimize redundacy. Data can be constructed so that additions, deletions, and modifications can be made in just one table.

Data goes through three forms until it is fully utilizied by a relational database:

- First Normalized Form: Each attribute contains only atomic values and only a single value from that domain. i.e. The data is in the form of a table with no merged columns or rows.

- Second Normalized Form: Each attribute in the table is dependent on the entity it is describing (dependent on the primary key).

- Third Normalized Form: All non-primary key attributes are not determined by any other non-primary key attributes.

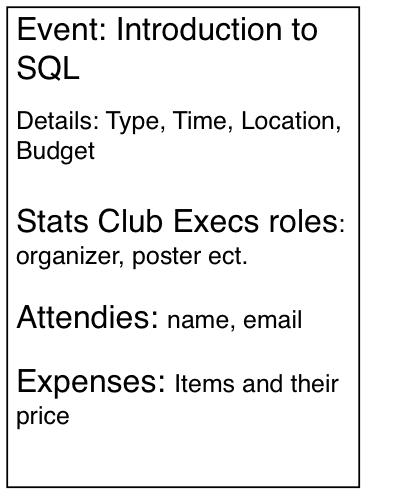

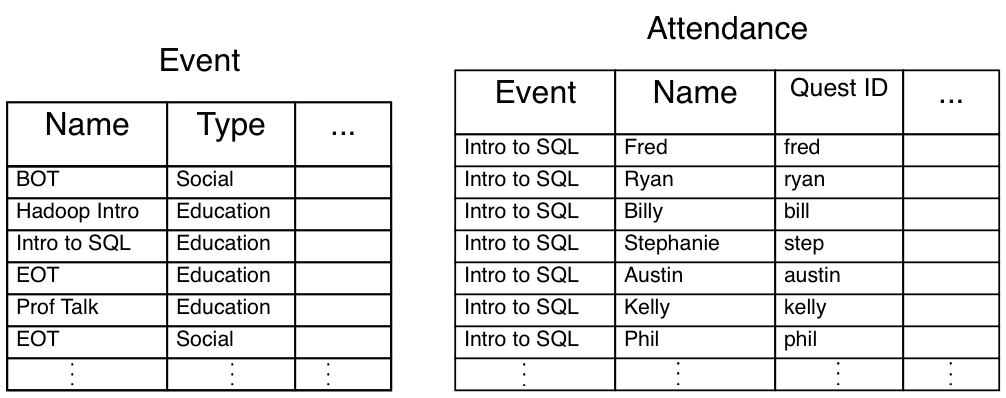

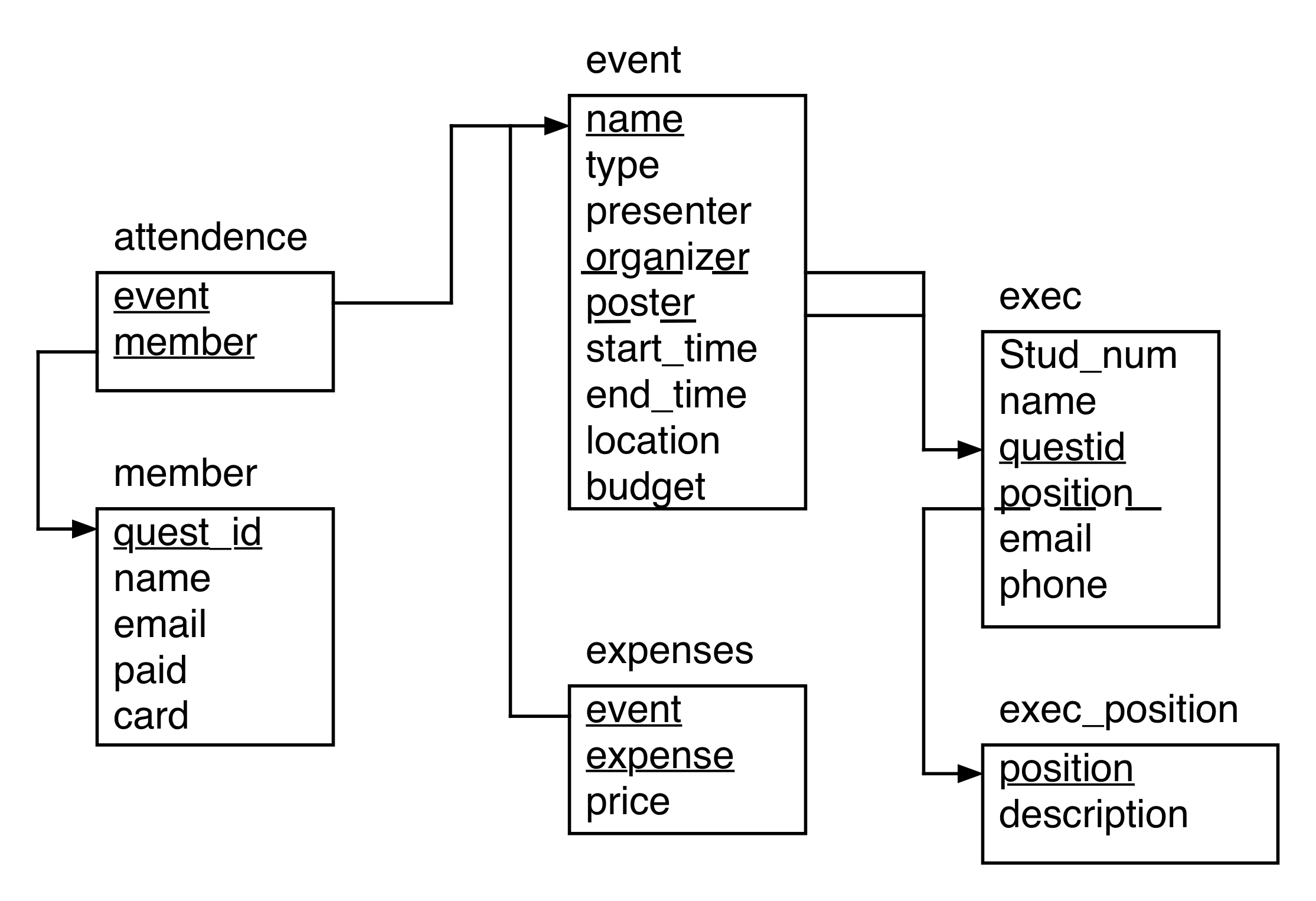

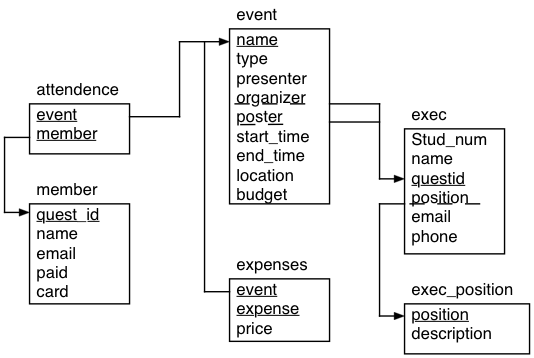

Example: For each event for Stats Club we have: 1. a list of attendies along with some basic information (email, ect.), 2. the event’s name, type (social or education), the roles for Stats Club execs for the event, time and location of the event, and a budget, 3. as well as a list of expenses for the event

A report of the event would look something like:

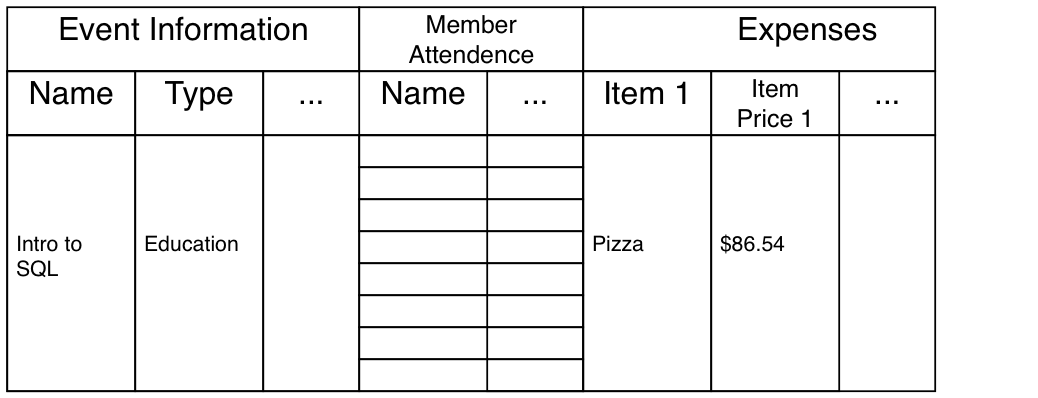

We first need to tabulate the data:

However this is not enough, the data is not in a form that can be easily recognized by computers. - How do you add new events? - What about members that attend multiple events? - that attend no events?

1st Normalization

First Normalized Form: Each attribute contains only atomic values and only a single value from that domain

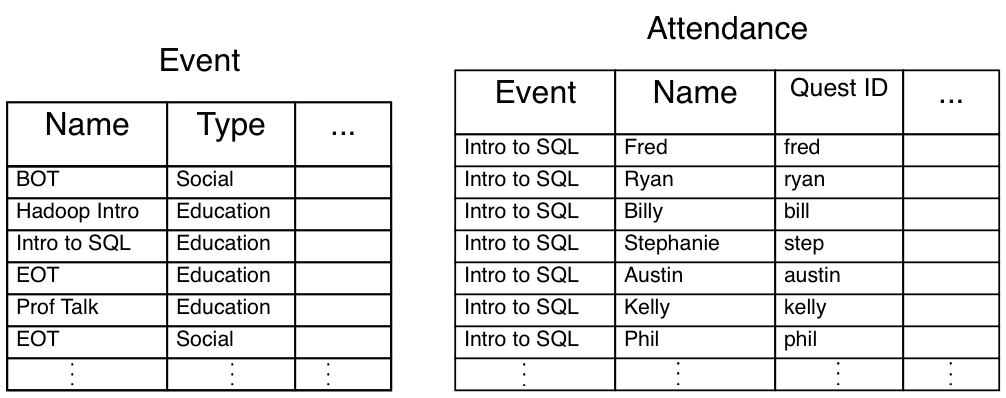

Example: To bring the data into 1st normalized form we need to break the table into two tables: Event, and Expenses:

- This contains all of the information before but is more organized for the computers to deal with

- Still not enough, a mispelling of an event or type could make the database think there is a new event

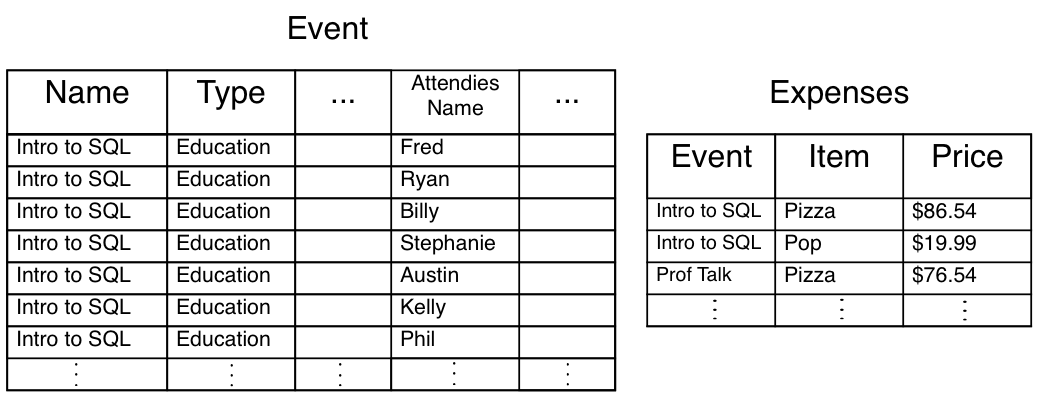

2nd Normalization

Second Normalized Form: Each attribute in the table is dependent on the entity it is describing (dependent on the primary key).

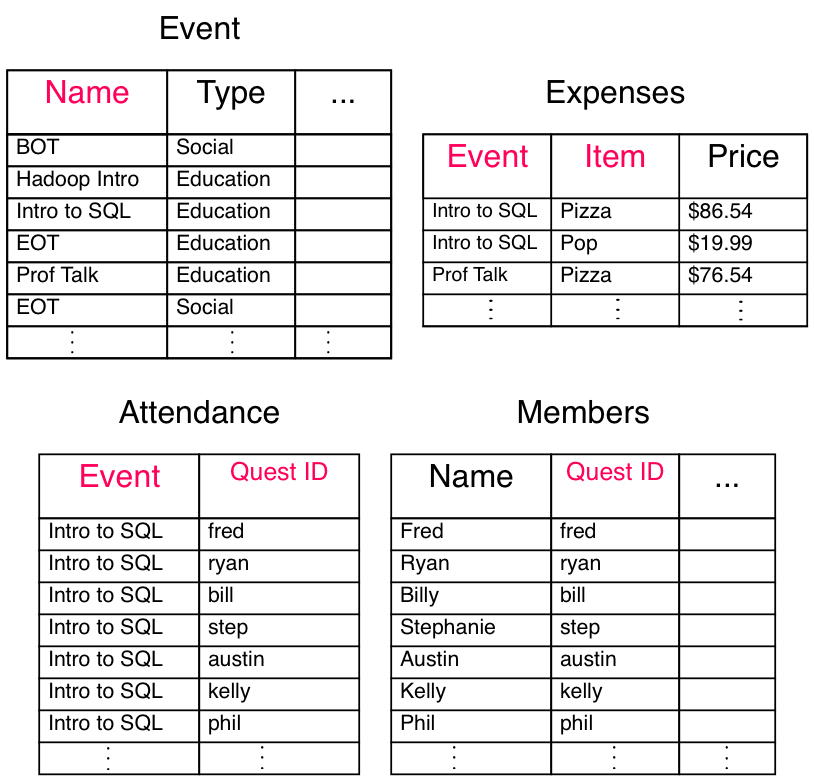

Example: To bring the data into 2nd normalized form, we need to break the Event table again. Let’s break the table so we get important description of the events (name, type, presenter, organizer, etc.) and a list of members that attended each event.

- Attendance is 2nd normalized form if we consider the primary key as the tuple of event and quest ID.

- Attendance still has redundant information, several members can attend multiple events or none at all

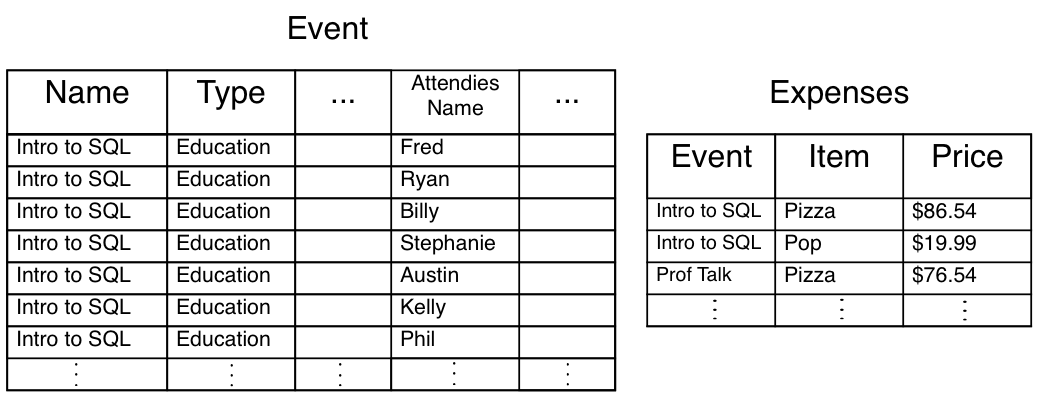

3rd Normalization

Third Normalized Form: All non-primary key attributes are not determined by any other non-primary key attributes.

Example: The information on each member (name, email, etc.) is not determined by the event. We need to break the attendance table to bring into 3rd normalized form: attendance and members.

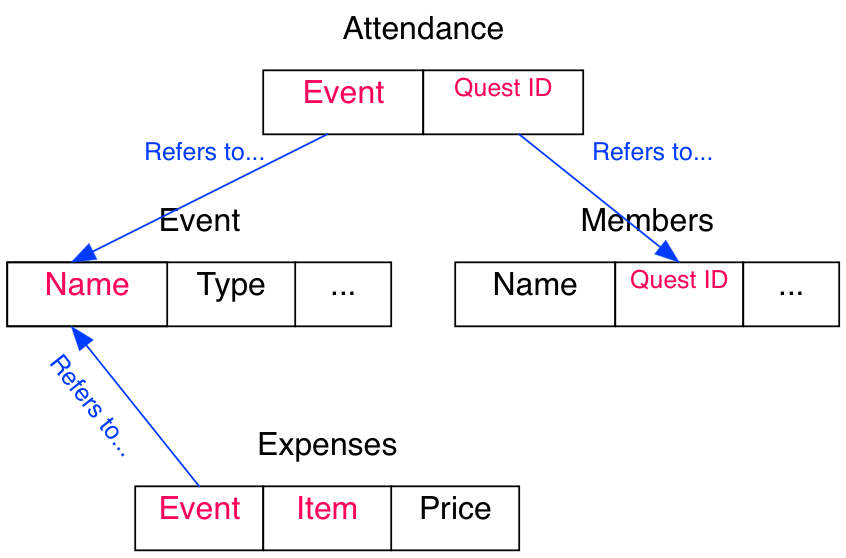

Primary Keys and Foriegn Keys

We can reconstruct the orignal table by joining tables, foreign keys with what they reference (primary keys). - We can only construct an instance of a foreign keys if their is already an instance of their reference - i.e. the set of foreign keys for one table is a subset of the primary keys they reference

Primary key: A tuple of columns that uniquely define each row in a table. (Red items below) Foreign key: A tuple of columns identifying a relationship to another table. (Blue items below)

Quiz: Normalization

Normalize the following table:

Student Name Quest ID Course Description Section Solutions are here

Relational Algebra Operations

Projection: Returns a subset of columns.

Selection: Returns only entities where some condition is true.

Rename: Rename an attribute.

Natural Join: Tuples from one table is joined to tuples from another table based on common attributes (at least one column with the same name and possible values is common between them)

Θ__-Join and Equijoin:__ Join tuples from two different tables where some binary condition (Θ = {≥, ≤, >, <, =}) between two tables attributes is true. When Θ is =, the join is called an equijoin.

Set Operations: Set theory’s unions, set difference, and cartesian product of tuples performed on tuples of different tables.

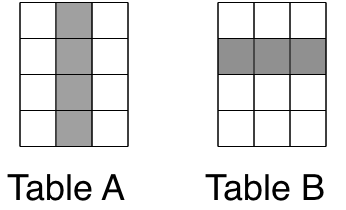

Quiz: Relational Algebra

Q1: What kind of operation is performed on the following tables?

Q2: What kinds of joins can peform on tables?

Solutions are here

Constraints

Constraints limit what can be entered into fields in a table and help ensure encapsulation:

PRIMARY KEY constraints Uniquely identifies each record in a table (quickly referencing it)

FOREIGN KEY constraints Points to a PRIMARY KEY of another table, enabling them to easily join them

CHECK constraints Limits the range of values that a field can take.

UNIQUE constraints Enforces uniqueness on an field (column).

NOT NULL constraints Enforces a field to always contain a value.

- We can also create indexes for fields making them easily searchable

SQL Language

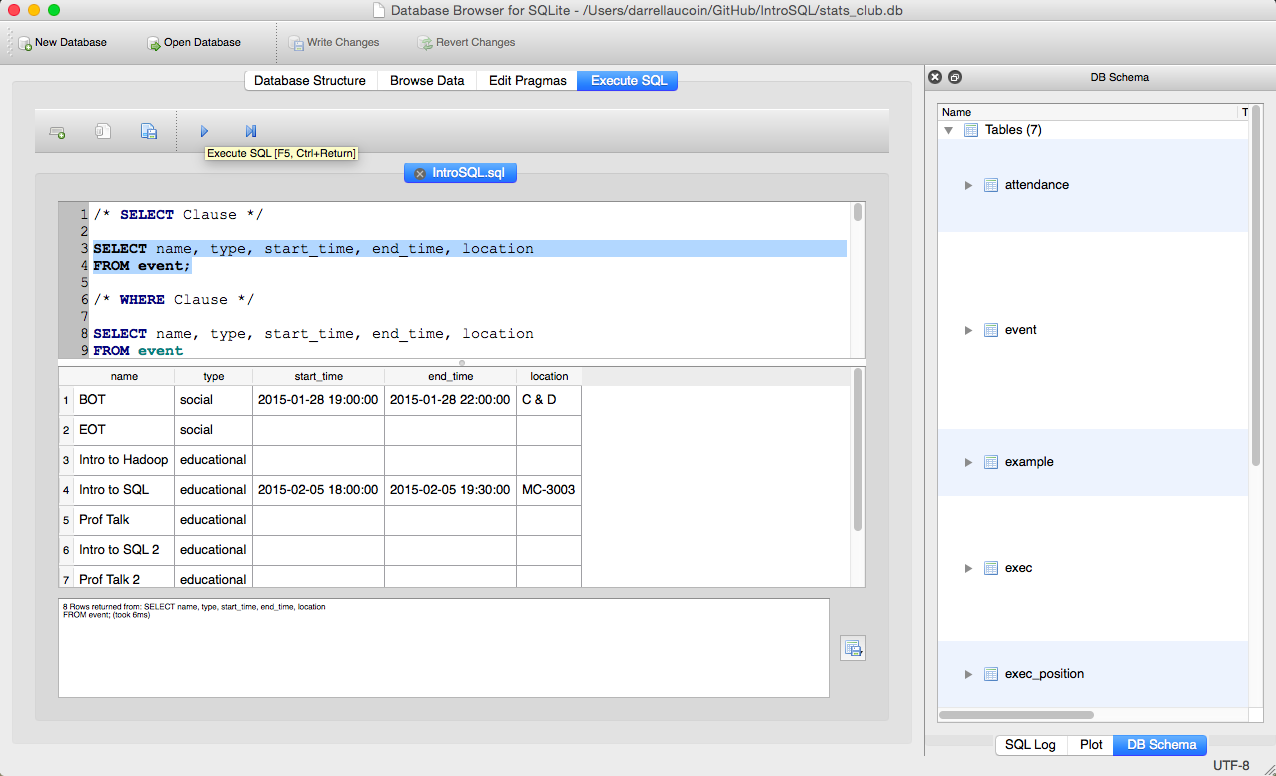

- Case does not matter, for presentation purposes UPPER CASE is used for SQL key words

-

SQL statements are processed as a whole (ignoring white space and new lines) and ends with a ‘;’

- We will be used a database based on Stats Club, supplied with fake data:

Data Types

In SQLite3, there are 5 basic data types:

-

INTEGER The value is a signed integer.

-

REAL The value is a floating point value, stored as an 8-byte IEEE floating point number.

-

TEXT The value is a text string.

-

BLOB The value is a blob of data, stored exactly as it was input.

-

NUMERIC May contain integer or real values. The data is stored as text but converted as necessary.

SQL General Data Types

- Different implementations of SQL uses different datatypes but there are some general commonalities:

Data type Description CHAR(n) Character string. Fixed length n VARCHAR(n) Character string. Variable length <= n BINARY(n) Binary string. Fixed length n BOOLEAN TRUE or FALSE values VARBINARY(n) Binary string. Variable length <= n INTEGER(p) Integer numerical (no decimal). Precision p SMALLINT Integer numerical (no decimal). Precision 5 INTEGER Integer numerical (no decimal). Precision 10 BIGINT Integer numerical (no decimal). Precision 19 DECIMAL(p,s) Exact numerical. precision p, scale s NUMERIC(p,s) Exact numerical. precision p, scale s FLOAT(p) Floating point number. mantissa precision p REAL Approximate numerical. Mantissa percision 7 FLOAT Approximate numerical. Mantissa percision 16 DATE Stores year, month, and day values TIME Stores hour, minute, and second values TIMESTAMP Stores year, month, day, hour, minute, and second values NULL Values

NULL A NULL can be thought of as an unknown value. - Any datatype can have NULL values - if either x or y is NULL:

x+y => NULL x>y => NULL SQL uses a three-value logic system: TRUE, FALSE, NULL:

AND TRUE FALSE NULL TRUE T F NULL FALSE F F F NULL NULL F NULL OR TRUE FALSE NULL TRUE T T T FALSE T F NULL NULL T NULL NULL NOT TRUE FALSE NULL F T NULL Projection (SELECT Clause)

SELECT Statement: The SELECT statement returns a table of values (sometimes empty) as specified in the statement.

SELECT Clause: The SELECT clause of a SELECT statement specifies the columns for the result set.

SELECT col1, col2, ... FROM table_name;- We can also specify all columns from a table

SELECT * FROM table_name;- If we leave out ‘

FROM table’ we are effectively taking from an empty table

In SELECT clauses, we can specify more than just columns from a table: Literials: Strings, numbers that are repeated for every row Expressions: Expressions of columns/literals Functions: Built in functions in SQL (ROUND(), etc.) User Defined Functions: Functions that a user can create within SQL to run

Example: Let’s try something simple: some literials and a calculation of

1 / 2SELECT 1, 2, 'this is a string', 1/2;1 2 'this is a string' 1/2 0 1 2 this is a string 0 Q: What happened here?

A: Since 1 and 2 are both integers, the expression 1/2 returns an integer. To get 0.5, we need to use a real number (a FLOAT).

SELECT 1, 2, 'this is a string', 1/2, 4/2, 5/2, 1/2., 1./2, 1/CAST(2 AS FLOAT);1 2 'this is a string' 1/2 4/2 5/2 1/2. 1./2 1/CAST(2 AS FLOAT) 0 1 2 this is a string 0 2 2 0.5 0.5 0.5 SQL statements ignore white spaces and new lines, the statement is only processed when after it sees ‘;’

Example: The following two statements produce the same table.

SELECT 1, 2, 'this is a string', 1/2;1 2 'this is a string' 1/2 0 1 2 this is a string 0 SELECT 1, 2, 'this is a string', 1/2;1 2 'this is a string' 1/2 0 1 2 this is a string 0 SQL Functions

There are many functions in SQLite and each implementation of SQL have different functions. Here are some random functions in SQLite:

Function Description ABS(col) Absolute value of numeric column LENGTH(col) Return length of string column LOWER(col) Return the string column in lower case UPPER(col) Return the string column in upper case RANDOM() A pseudo-random integer between -9223372036854775808 and +9223372036854775807 SELECT ABS(-8), LENGTH('This is a String'), LOWER('ThIS Is A StRiNg'), RANDOM();ABS(-8) LENGTH('This is a String') LOWER('ThIS Is A StRiNg') RANDOM() 0 8 16 this is a string 1085249690759383083 There are many more core functions within SQLite.

Quiz: SELECT Clause

Calculate the average of 2 random numbers.

Q: What is the upper and lower case for the string ‘UPPER or lower’?

Solutions are here

FROM Clause

FROM Clause: Specifies the table: either a persistant table, or a result set: a join of two or more tables or a subquery or some combination of the two.

SELECT col1, col2, ... FROM table_name;Example: What are the names, type, times and locations for Stats Club?

SELECT name, type, start_time, end_time, location FROM event;name type start_time end_time location 0 BOT social 2015-01-28 19:00:00 2015-01-28 22:00:00 C & D 1 EOT social None None None 2 Intro to Hadoop educational None None None 3 Intro to SQL educational 2015-02-05 18:00:00 2015-02-05 19:30:00 MC-3003 4 Prof Talk educational None None None 5 Intro to SQL 2 educational None None None 6 Prof Talk 2 educational None None None 7 Prof Talk 3 educational None None None Quiz: FROM Clause

Q: Who are the execs for Stats Club, and what are their positions, and emails? - Projection of name, position, and email from the table exec

Solutions are here

Aggregate Functions

Aggregate Functions: Takes in the columns of a table and aggregates over the entries.

Function Return value AVG(column) Average of non-null values COUNT(column) Count of non-null values MAX(column) Maximum of values MIN(column) Minimum of values SUM(column) Sum of values GROUP_CONCAT(column) Concatenation of column strings - There are more aggregate functions for other implementations of SQL

More detailed descriptions of the aggregate functions within SQLite can be found here.

SELECT COUNT( 12 ), COUNT('ssdf'), COUNT(NULL), SUM(23), SUM(0), SUM(NULL), AVG(0), AVG(NULL);COUNT( 12 ) COUNT('ssdf') COUNT(NULL) SUM(23) SUM(0) SUM(NULL) AVG(0) AVG(NULL) 0 1 1 0 23 0 None 0 None Lets work with some aggregate functions with the table

examplebelow:number floating string 0 1 23.23 this 1 3232 -21.23 is 2 11 -2.00 a 3 -23 54.00 string 4 2 NaN concatenated SELECT COUNT(*), COUNT(string), COUNT(floating), AVG(number), SUM(number), GROUP_CONCAT(string, ' ') FROM example;COUNT(*) COUNT(string) COUNT(floating) AVG(number) SUM(number) GROUP_CONCAT(string, ' ') 0 5 5 4 644.6 3223 this is a string concatenated DISTINCT Prefix

In the SELECT clause we can specify to return only distinct tuples of columns

SELECT DISTINCT col1, col2, ... FROM table_name;- We can also use DISTINCT within aggregate functions making them only aggregate over distinct entries

SELECT aggregate_function(DISTINCT column_name) FROM table_name;Example: What events have members attended? - What are the distinct events where at least one member attended?

SELECT DISTINCT event FROM attendance;event 0 Intro to SQL 1 BOT 2 EOT 3 Intro to Hadoop 4 Intro to SQL 2 5 Prof Talk 6 Prof Talk 2 Quiz: DISTINCT

Q: What are the distinct majors of Stats Club members?

Q: How many distinct majors of Stats Club members are there?

- DISTINCT can be within aggregate functions

Solutions are here

Alias

Column Alias

To increase the readability of SQL as well as the result set, we can give columns new names:

SELECT col AS new_name FROM table_name;- Column aliases make the final table more readiable and workiable for subqueries

Table Alias

We can also give tables new names as well:

SELECT table_alias_1.col_name, table_alias_2.col_name, ... FROM table_1 AS table_alias_1, table_2 AS table_alias_2;- Very useful when tables have common column names

Note: We can reference what table a column is coming from by a ‘.’

table_name.column_nameExample: If we give column aliases for the previous table, we make the result more interpretiable:

SELECT COUNT(*) AS num_rows, COUNT(string) AS num_strings, COUNT(floating) AS num_float, AVG(number) AS avg_integer, SUM(number) AS sum_int, GROUP_CONCAT(string, ' ') AS cat_string FROM example;num_rows num_strings num_float avg_integer sum_int cat_string 0 5 5 4 644.6 3223 this is a string concatenated Quiz: Aliases

Perform a query using a table alias, and use this table alias when referencing the column i.e.

table_alias.column_name- This will be really important for bigger, more complicated queriesSolutions are here

Selection (WHERE Clause)

WHERE clauses filters the result set, removing rows where the condition returns either FALSE or NULL.

SELECT col1, col2, ... FROM table_name WHERE condition;Example: What Stats Club events are social?

SELECT name, type, start_time, end_time, location FROM event WHERE type = 'social';name type start_time end_time location 0 BOT social 2015-01-28 19:00:00 2015-01-28 22:00:00 C & D 1 EOT social None None None Prediate Operators

Operator Description Example = Equal to WHERE gender = ‘M’ <>, != Not equal to WHERE gender <> ‘M’ > Greater than WHERE num > 5 < Less than WHERE num < 5 >= Greater than or equal to WHERE num >= 5 <= Less than or equal to WHERE num <= 5 IS NULL Value is NULL WHERE num IS NULL IS NOT NULL Value is not NULL WHERE num IS NOT NULL BETWEEN Between a range WHERE num BETWEEN 3 AND 5 IN In a list of values WHERE num IN (3, 5, 8) LIKE Pattern Search WHERE str LIKE ‘F%’ EXISTS Subquery have any rows? WHERE EXISTS (subquery) LIKE Predicate

LIKE predicates

‘_’ means a character of any type ‘%’ means between 0 or more characters of any type

Example: What Stats Club members has a name begining with F?

SELECT * FROM member WHERE name LIKE 'F%';quest_id name email faculty major paid card 0 fred Fred E. Finch fred@uwaterloo.ca Math Pure Math Y Y 1 frances Frances A. Miller frances@uwaterloo.ca Math Stats Y Y Operator Modifiers

ALL, ANY or SOME Operator Modifiers

The operators =, <>, !=, >, <, >=, <= can be used with a list of values and the operators

ALLorANY / SOME.- SQLite does NOT have

ALLorANY / SOMEimplemented

ANY, SOME Operator returns true, if operator is true for any value in the set.

ALL Operator returns true, if operator is true for all values in the set.

SELECT * FROM table_name WHERE column_name < ALL (subquery_returning_one_column);Example: What are the expenses for non-social events? (

ALL,ANY, andSOMEare not implmented in SQLite)SELECT * FROM expenses WHERE event != ALL (SELECT name FROM event WHERE type = 'social');event expense price 0 Intro to SQL pizza 87.43 1 Intro to SQL pop 15.34 2 Intro to Hadoop coffee 23.12 3 Intro to Hadoop water 10.23 4 Intro to Hadoop donuts 53.23 5 Intro to Hadoop cookies 10.23 6 Intro to SQL: Basic Queries cookies 10.23 7 Intro to SQL: Basic Queries donuts 20.34 8 Intro to SQL: Basic Queries pop 21.54 9 Intro to SQL: Basic Queries water 10.52 10 Prof Talk pop 20.31 11 Prof Talk pizza 62.56 12 Prof Talk 2 pizza 61.56 13 Prof Talk 2 pop 15.65 14 Prof Talk 3 pizza 62.45 15 Prof Talk 3 pop 13.23 16 Intro to SQL: Advanced Queries cookies 10.23 17 Intro to SQL: Advanced Queries donuts 20.34 18 Intro to SQL: Advanced Queries pop 21.54 19 Intro to SQL: Advanced Queries water 10.52 AND, OR Operators

A group of filter conditions can be linked together with AND or OR operators.

SELECT col1, col2, ... FROM table_name WHERE (condition1 AND condition2 ) OR condition3;Example: What Stats Club members has a name with it’s second letter as A or ends in B?

SELECT name FROM member WHERE name LIKE '_a%' OR name LIKE '%b';name 0 Darrell Aucoin 1 James M. Eddings 2 James A. Foxt 3 Daniel J. Moore 4 Nancy P. Jackson 5 Ralph L. Waldrop 6 Tameika M. McMaster 7 Janelle T. Smith 8 Ruben Lamb 9 Patrick Robertson Quiz: Filtering (WHERE Clause)

Q: What events for Stats Club are introductory talks? - Introductory talk names start with ‘Intro’

Q: What Stats Club members have their first name starting with a letter BETWEEN A and G?

Solutions are here

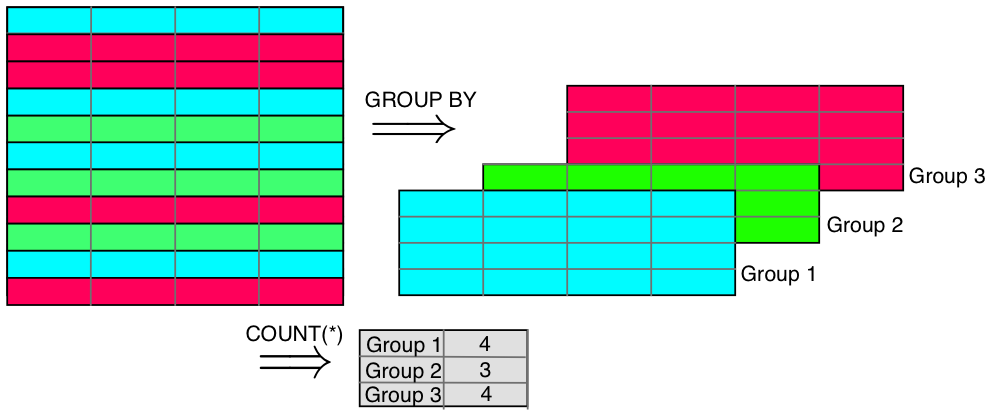

GROUP BY Clause

- GROUP BY clause groups the table by a column (or tuple of columns) and applies

a function to each group

- In the SELECT portion of the statement, you can only list the grouped column(s) and aggregate functions on them.

SELECT col1, col2, ..., aggregate_function FROM table_name GROUP BY col1, col2, ...;Aggregate Functions

Recall: Aggregate Functions: Takes in the columns of a table and aggregates over the entries. - If we use a GROUP BY clause, the aggregation will be over those groups

Function Return value AVG(column) Average of non-null values COUNT(column) Count of non-null values MAX(column) Maximum of values MIN(column) Minimum of values SUM(column) Sum of values GROUP_CONCAT(column) Concatenation of column strings - There are more aggregate functions for other implementations of SQL

Example: What are the number of each type of event for Stats Club?

SELECT type, COUNT(*) AS num_events FROM event GROUP BY type;type num_events 0 educational 6 1 social 2 HAVING Clause

HAVING clauses are very similar to WHERE clauses but can have aggregate function in their conditions.

You can have a WHERE and HAVING clause in the same statement.

Example: How many Stats Club members are in each major where the major has at least 2 members?

SELECT faculty, major, COUNT(*) FROM member GROUP BY faculty, major HAVING COUNT(*) >= 2;faculty major COUNT(*) 0 Math Act Sci 10 1 Math Applied Math 2 2 Math C & O 2 3 Math CS 3 4 Math Pure Math 2 5 Math Stats 14 GROUP BY with ROLLUP / CUBE

The ROLLUP operator produces a result set where the aggregate function is applied to each level of the GROUP BY hierachy. - The ROLLUP operator is NOT implemented in SQLite - Useful for making reports with totals and subtotals

SELECT col1, col2, ..., aggregate_function FROM table_name GROUP BY col1, col2, ... WITH ROLLUP;Example: What are the number of Stats Club members in each faculty and major, including subtotals?

SELECT faculty, major, COUNT(*) AS num_members FROM member GROUP BY faculty, major WITH ROLLUP;faculty major num_members 0 Art Econ 1 1 Art None 1 2 Math Act Sci 10 3 Math Applied Math 2 4 Math C & O 2 5 Math CS 3 6 Math Pure Math 2 7 Math Stats 14 8 Math None 33 9 None None 34 Note that for Art and Math in faculty there is a row which has a NULL value. This is a total for those groups.

There is also one row with NULL values for faculty and major, this is the grand total of all members.

Quiz: Aggregation (GROUP BY Clause)

Q: What is the attendance for each Stats Club event?

Solutions are here

Joins

At times, we need information from multiple tables, to do this we need to join tables together. We can do this several ways:

- CROSS JOIN: The cartesian product of rows from each table.

- INNER JOIN: Join two tables on a join-predicate, losing rows when evaluated false/null.

-

OUTER JOIN: Retains each record for the table(s) even when it has no matching rows from the other table. The returning table has null values for missing records.

-

LEFT OUTER JOIN: Keep each record for first table but not the table it’s joining with.

-

RIGHT OUTER JOIN: Keep each record for second table but not the table it’s joining with.

-

FULL OUTER JOIN: Keep all record for all tables.

-

- NATURAL JOIN: Tables with the exact same column name and datatype are joined along that column.

CROSS JOIN

CROSS JOIN is the cartesian product of two tables

SELECT col1, col2, ... FROM table1 CROSS JOIN table2;- if table1 has n rows and table2 has m rows, then the result set has n * m rows

We can also get a CROSS JOIN by listing the tables seperated by a ‘,’

SELECT col1, col2, ... FROM table1, table2;Example: Suppose we are creating a games tourtament between Stats Club members where every member play every other member once. How can we create such a table?

SELECT m1.name AS Player_1, m2.name AS Player_2 FROM member AS m1 CROSS JOIN member AS m2;Player_1 Player_2 0 Darrell Aucoin Darrell Aucoin 1 Darrell Aucoin Fred E. Finch 2 Darrell Aucoin Ryan T. Luby 3 Darrell Aucoin Billy L. Hunter 4 Darrell Aucoin John J. Oquendo 5 Darrell Aucoin Stephanie R. Matthews 6 Darrell Aucoin Robert B. Williams 7 Darrell Aucoin Austin K. Gilliard 8 Darrell Aucoin James M. Eddings 9 Darrell Aucoin Elaine S. Ott 10 Darrell Aucoin James A. Foxt 11 Darrell Aucoin Daniel J. Moore 12 Darrell Aucoin Kelly S. Ferguson 13 Darrell Aucoin Joseph L. Wood 14 Darrell Aucoin Vivian R. Donley 15 Darrell Aucoin Frances A. Miller 16 Darrell Aucoin Mina W. Lawrence 17 Darrell Aucoin Phillip C. Mascarenas 18 Darrell Aucoin Jeff M. Wright 19 Darrell Aucoin Deborah D. Helfrich 20 Darrell Aucoin Nancy P. Jackson 21 Darrell Aucoin Bobbie D. Mathews 22 Darrell Aucoin Arnold J. Fuller 23 Darrell Aucoin Melvin O. Martin 24 Darrell Aucoin Ralph L. Waldrop 25 Darrell Aucoin Mildred F. Hottinger 26 Darrell Aucoin Tameika M. McMaster 27 Darrell Aucoin Melissa R. Anderson 28 Darrell Aucoin Janelle T. Smith 29 Darrell Aucoin Ann W. McLaughlin ... ... ... 1126 Patrick Robertson John J. Oquendo 1127 Patrick Robertson Stephanie R. Matthews 1128 Patrick Robertson Robert B. Williams 1129 Patrick Robertson Austin K. Gilliard 1130 Patrick Robertson James M. Eddings 1131 Patrick Robertson Elaine S. Ott 1132 Patrick Robertson James A. Foxt 1133 Patrick Robertson Daniel J. Moore 1134 Patrick Robertson Kelly S. Ferguson 1135 Patrick Robertson Joseph L. Wood 1136 Patrick Robertson Vivian R. Donley 1137 Patrick Robertson Frances A. Miller 1138 Patrick Robertson Mina W. Lawrence 1139 Patrick Robertson Phillip C. Mascarenas 1140 Patrick Robertson Jeff M. Wright 1141 Patrick Robertson Deborah D. Helfrich 1142 Patrick Robertson Nancy P. Jackson 1143 Patrick Robertson Bobbie D. Mathews 1144 Patrick Robertson Arnold J. Fuller 1145 Patrick Robertson Melvin O. Martin 1146 Patrick Robertson Ralph L. Waldrop 1147 Patrick Robertson Mildred F. Hottinger 1148 Patrick Robertson Tameika M. McMaster 1149 Patrick Robertson Melissa R. Anderson 1150 Patrick Robertson Janelle T. Smith 1151 Patrick Robertson Ann W. McLaughlin 1152 Patrick Robertson Judith B. Gibbons 1153 Patrick Robertson Ruben Lamb 1154 Patrick Robertson Dominick Byrd 1155 Patrick Robertson Patrick Robertson 1156 rows × 2 columns

However we have have players playing themselves, and rounds with the same players in opposite roles. We just need to filter these out.

SELECT m1.name AS Player_1, m2.name AS Player_2 FROM member AS m1 CROSS JOIN member AS m2 WHERE m1.name > m2.name;Player_1 Player_2 0 Darrell Aucoin Billy L. Hunter 1 Darrell Aucoin Austin K. Gilliard 2 Darrell Aucoin Daniel J. Moore 3 Darrell Aucoin Bobbie D. Mathews 4 Darrell Aucoin Arnold J. Fuller 5 Darrell Aucoin Ann W. McLaughlin 6 Fred E. Finch Darrell Aucoin 7 Fred E. Finch Billy L. Hunter 8 Fred E. Finch Austin K. Gilliard 9 Fred E. Finch Elaine S. Ott 10 Fred E. Finch Daniel J. Moore 11 Fred E. Finch Frances A. Miller 12 Fred E. Finch Deborah D. Helfrich 13 Fred E. Finch Bobbie D. Mathews 14 Fred E. Finch Arnold J. Fuller 15 Fred E. Finch Ann W. McLaughlin 16 Fred E. Finch Dominick Byrd 17 Ryan T. Luby Darrell Aucoin 18 Ryan T. Luby Fred E. Finch 19 Ryan T. Luby Billy L. Hunter 20 Ryan T. Luby John J. Oquendo 21 Ryan T. Luby Robert B. Williams 22 Ryan T. Luby Austin K. Gilliard 23 Ryan T. Luby James M. Eddings 24 Ryan T. Luby Elaine S. Ott 25 Ryan T. Luby James A. Foxt 26 Ryan T. Luby Daniel J. Moore 27 Ryan T. Luby Kelly S. Ferguson 28 Ryan T. Luby Joseph L. Wood 29 Ryan T. Luby Frances A. Miller ... ... ... 531 Dominick Byrd Daniel J. Moore 532 Dominick Byrd Deborah D. Helfrich 533 Dominick Byrd Bobbie D. Mathews 534 Dominick Byrd Arnold J. Fuller 535 Dominick Byrd Ann W. McLaughlin 536 Patrick Robertson Darrell Aucoin 537 Patrick Robertson Fred E. Finch 538 Patrick Robertson Billy L. Hunter 539 Patrick Robertson John J. Oquendo 540 Patrick Robertson Austin K. Gilliard 541 Patrick Robertson James M. Eddings 542 Patrick Robertson Elaine S. Ott 543 Patrick Robertson James A. Foxt 544 Patrick Robertson Daniel J. Moore 545 Patrick Robertson Kelly S. Ferguson 546 Patrick Robertson Joseph L. Wood 547 Patrick Robertson Frances A. Miller 548 Patrick Robertson Mina W. Lawrence 549 Patrick Robertson Jeff M. Wright 550 Patrick Robertson Deborah D. Helfrich 551 Patrick Robertson Nancy P. Jackson 552 Patrick Robertson Bobbie D. Mathews 553 Patrick Robertson Arnold J. Fuller 554 Patrick Robertson Melvin O. Martin 555 Patrick Robertson Mildred F. Hottinger 556 Patrick Robertson Melissa R. Anderson 557 Patrick Robertson Janelle T. Smith 558 Patrick Robertson Ann W. McLaughlin 559 Patrick Robertson Judith B. Gibbons 560 Patrick Robertson Dominick Byrd 561 rows × 2 columns

INNER JOIN

INNER JOIN Joins two tables where the join condition returns true. Discarded when returning false or NULL.

SELECT col1, col2, ... FROM table1 INNER JOIN table2 ON condition;ON Clause

The ON clause specifies the join condition:

-

The ON clause can use a multiple set of conditions connected by AND, OR

-

USING(

) can also be used if both tables have the same column name and type -

Some SQL implementations constructs the ON clause from the WHERE clause (DB2)

- filtering by the WHERE clause gives the same result but in some implementations will product an intermediate cross product of tables (making the query slower)

Example: How many events does each member attend?

- Note that this query does not include members who attended no events

- Query is ordered by events_attended to show a comparison with a latter query

SELECT m.name, COUNT(a.event) AS events_attended FROM member AS m INNER JOIN attendance AS a ON m.quest_id = a.member GROUP BY m.name ORDER BY events_attended;name events_attended 0 John J. Oquendo 1 1 James M. Eddings 2 2 Melissa R. Anderson 2 3 Melvin O. Martin 2 4 Mina W. Lawrence 2 5 Ann W. McLaughlin 3 6 Bobbie D. Mathews 3 7 Janelle T. Smith 3 8 Mildred F. Hottinger 3 9 Phillip C. Mascarenas 3 10 Ryan T. Luby 3 11 Vivian R. Donley 3 12 Arnold J. Fuller 4 13 Austin K. Gilliard 4 14 Jeff M. Wright 4 15 Kelly S. Ferguson 4 16 Nancy P. Jackson 4 17 Ralph L. Waldrop 4 18 Ruben Lamb 4 19 Billy L. Hunter 5 20 Deborah D. Helfrich 5 21 Dominick Byrd 5 22 Frances A. Miller 5 23 Fred E. Finch 5 24 Joseph L. Wood 5 25 Judith B. Gibbons 5 26 Robert B. Williams 5 27 Stephanie R. Matthews 5 28 Tameika M. McMaster 5 29 Elaine S. Ott 6 30 Daniel J. Moore 7 31 Patrick Robertson 7 OUTER JOIN

OUTER JOIN A join that returns all rows for 1 or 2 tables, even when there is no corresponding value. In these cases, NULL values are entered for these corresponding rows.

There are 3 types of OUTER JOINs:

-

LEFT OUTER JOIN: An OUTER JOIN returning all rows of the table first mentioned.

-

RIGHT OUTER JOIN: An OUTER JOIN returning all rows of the table second mentioned.

-

FULL OUTER JOIN: An OUTER JOIN returning all rows of both tables.

- Only LEFT OUTER JOIN is implemented in SQLite

Example: What are the names of Stat Club members and how many events they attended?

SELECT m.name, COUNT(a.event) AS events_attended FROM member AS m LEFT OUTER JOIN attendance AS a ON m.quest_id = a.member GROUP BY m.name ORDER BY events_attended;name events_attended 0 Darrell Aucoin 0 1 James A. Foxt 0 2 John J. Oquendo 1 3 James M. Eddings 2 4 Melissa R. Anderson 2 5 Melvin O. Martin 2 6 Mina W. Lawrence 2 7 Ann W. McLaughlin 3 8 Bobbie D. Mathews 3 9 Janelle T. Smith 3 10 Mildred F. Hottinger 3 11 Phillip C. Mascarenas 3 12 Ryan T. Luby 3 13 Vivian R. Donley 3 14 Arnold J. Fuller 4 15 Austin K. Gilliard 4 16 Jeff M. Wright 4 17 Kelly S. Ferguson 4 18 Nancy P. Jackson 4 19 Ralph L. Waldrop 4 20 Ruben Lamb 4 21 Billy L. Hunter 5 22 Deborah D. Helfrich 5 23 Dominick Byrd 5 24 Frances A. Miller 5 25 Fred E. Finch 5 26 Joseph L. Wood 5 27 Judith B. Gibbons 5 28 Robert B. Williams 5 29 Stephanie R. Matthews 5 30 Tameika M. McMaster 5 31 Elaine S. Ott 6 32 Daniel J. Moore 7 33 Patrick Robertson 7 Natural Join

NATURAL JOIN A join condition that lets the server decide on the join conditions based on the same column names and types across columns for the tables.

Example: What are the position and duties of each Stats Club exec?

SELECT e.name, e.position, ep.duties FROM exec AS e NATURAL JOIN exec_position AS ep;name position duties 0 Darrell Aucoin President To be aware of MathSoc's Policies and Bylaws i... 1 Darrell Aucoin President To call and preside over general meetings. 2 Darrell Aucoin President To manage the executive team and the strategic... 3 Darrell Aucoin President To post announcements of all club meetings, an... 4 Judith B. Gibbons Events To assist the president and other vice-preside... 5 Judith B. Gibbons Events To chair the organization and promotion of lea... 6 Lamar Roy Finance To ensure membership fees are collected and ma... 7 Lamar Roy Finance To keep an up-to-date record of financial tran... 8 Lamar Roy Finance To prepare a summary of the financial records ... 9 Lamar Roy Finance To prepare the budget at the beginning of term. 10 Lamar Roy Finance To volunteer as president in the absence of th... 11 Gilberto Cross Events To assist the president and other vice-preside... 12 Gilberto Cross Events To chair the organization and promotion of lea... 13 Melba Lane President To be aware of MathSoc's Policies and Bylaws i... 14 Melba Lane President To call and preside over general meetings. 15 Melba Lane President To manage the executive team and the strategic... 16 Melba Lane President To post announcements of all club meetings, an... 17 Ruben Lamb Technology Maintain and update the club website. 18 Ruben Lamb Technology Maintain any hardware, software, or technology... 19 Ruben Lamb Technology Perform the duties of a Vice President - Event... 20 Patrick Robertson Events To assist the president and other vice-preside... 21 Patrick Robertson Events To chair the organization and promotion of lea... 22 Dominick Byrd Events To assist the president and other vice-preside... 23 Dominick Byrd Events To chair the organization and promotion of lea... Quiz: Joining Tables

Q: What are the email addresses and phone numbers of stats club execs who are in change or organizing at least one event?

Solutions are here

Subqueries

Subqueries are queries contained in queries. These subqueries are contained in ‘(‘, ‘)’

There are two types of subqueries:

-

Non-Correlated Subqueries: Can be run independently of the larger query.

-

Correlated Subqueries: Must be run concurrently with the outer query. They are dependent on the outer query.

Non-Correlated Subqueries

Non-Correlated Subquery: Any valid query within query that if executed by itself will produce a result (including empty set). These are enclosed in ‘(‘, ‘)’ in FROM, WHERE, or HAVING clauses.

Example: What Stats Club execs are in charge of making posters?

SELECT e.name, e.position FROM exec AS e WHERE e.questid IN (SELECT poster FROM event);name position 0 Dominick Byrd Events 1 Gilberto Cross Events 2 Judith B. Gibbons Events 3 Patrick Robertson Events Correlated Subqueries

Correlated Subquery makes references to it’s containing query, executing it for every candidate row referenced. - Correlated subqueries can appear in the SELECT, WHERE, or HAVING clauses.

Example: What majors are the current Stats Club execs enrolled in? - The member table has the information on majors but exec has the information on execs

SELECT name, position, (SELECT faculty FROM member AS m WHERE m.quest_id = e.questid) AS faulty, (SELECT major FROM member AS m WHERE m.quest_id = e.questid) AS major FROM exec AS e;name position faulty major 0 Darrell Aucoin President Math Stats 1 Judith B. Gibbons Events Math Act Sci 2 Lamar Roy Finance None None 3 Gilberto Cross Events None None 4 Melba Lane President None None 5 Ruben Lamb Technology Math Act Sci 6 Hannah Mason SeniorAdvisor None None 7 Patrick Robertson Events Math Stats 8 Dominick Byrd Events Math Pure Math Correlated vs Non-Correlated

-

Correlated subquery is dependent on outer query, non-correlated is independent.

-

Correlated subquery is executed concurrently with outer query, non- correlated is executed before.

-

In general, for speed of execution:

Correlated subquery < Non-Correlated subquery < Joins Quiz: Subqueries

Q: Where can a Non-Correlated subquery can be placed?

Q: Where can a Correlated subquery can be placed?

Q: Using a non-correlated subquery, what are the names, locations, and descriptions of events that served pizza? 1. Break the problem into smaller pieces: What are the events that served pizza? 2. Only retrieve values from the table

eventthatevent.namematches those valuesSolutions are here

Set Operations

Set operations create a combination of rows from 2 tables into one result set. - The tables (or the projection of those tables) have to have the same number of columns and datatypes - if one column appears in a table but not in another, supply the value NULL for the missing column

- UNION: For tables A and B, combines the rows for A and B into one result set.

- INTERSECT: For tables A and B, returns the rows for A and B that they have in common.

- Difference (EXCEPT): For tables A and B, return only the rows in A that are not in common with B.

UNION

UNION operator: Addition of one result set to another result set with the same number of attributes and types.

SELECT ... FROM ... UNION [ALL] SELECT ... FROM ...- Just UNION removes duplicates, while UNION ALL keeps all rows from both result sets.

Example: Suppose are you need to contact everyone involved in Stats Club: members and execs. What are the names, email addresses, and phone numbers (if you have them) of all Stats Club members and execs?

SELECT name, email, NULL AS phone FROM member UNION SELECT name, email, phone FROM exec;name email phone 0 Ann W. McLaughlin ann@uwaterloo.ca None 1 Arnold J. Fuller arnold@uwaterloo.ca None 2 Austin K. Gilliard austin@uwaterloo.ca None 3 Billy L. Hunter billy@uwaterloo.ca None 4 Bobbie D. Mathews bobbie@uwaterloo.ca None 5 Daniel J. Moore daniel@uwaterloo.ca None 6 Darrell Aucoin darrell.aucoin@gmail.com None 7 Darrell Aucoin darrell.aucoin@gmail.com 519-555-1424 8 Deborah D. Helfrich deborah@uwaterloo.ca None 9 Dominick Byrd dominick@uwaterloo.ca None 10 Dominick Byrd dominick@uwaterloo.ca 519-555-2325 11 Elaine S. Ott elaine@uwaterloo.ca None 12 Frances A. Miller frances@uwaterloo.ca None 13 Fred E. Finch fred@uwaterloo.ca None 14 Gilberto Cross gilberto@uwaterloo.ca 519-555-3453 15 Hannah Mason hannah@uwaterloo.ca 519-555-2342 16 James A. Foxt james.fox@uwaterloo.ca None 17 James M. Eddings james@uwaterloo.ca None 18 Janelle T. Smith janelle@uwaterloo.ca None 19 Jeff M. Wright jeff@uwaterloo.ca None 20 John J. Oquendo john@uwaterloo.ca None 21 Joseph L. Wood joseph@uwaterloo.ca None 22 Judith B. Gibbons judith@uwaterloo.ca None 23 Judith B. Gibbons judith@uwaterloo.ca 519-555-2343 24 Kelly S. Ferguson kelly@uwaterloo.ca None 25 Lamar Roy lamar@uwaterloo.ca 519-555-3432 26 Melba Lane melba@uwaterloo.ca 519-555-2322 27 Melissa R. Anderson melissa@uwaterloo.ca None 28 Melvin O. Martin melvin@uwaterloo.ca None 29 Mildred F. Hottinger mildred@uwaterloo.ca None 30 Mina W. Lawrence mina@uwaterloo.ca None 31 Nancy P. Jackson nancy@uwaterloo.ca None 32 Patrick Robertson patrick@uwaterloo.ca None 33 Patrick Robertson patrick@uwaterloo.ca 519-555-2312 34 Phillip C. Mascarenas phillip@uwaterloo.ca None 35 Ralph L. Waldrop ralph@uwaterloo.ca None 36 Robert B. Williams robert@uwaterloo.ca None 37 Ruben Lamb ruben@uwaterloo.ca None 38 Ruben Lamb ruben@uwaterloo.ca 519-555-5232 39 Ryan T. Luby ryan@uwaterloo.ca None 40 Stephanie R. Matthews step@uwaterloo.ca None 41 Tameika M. McMaster tameika@uwaterloo.ca None 42 Vivian R. Donley vivian@uwaterloo.ca None Intersection (INTERSECT Operator)

INTERSECT operator: Returns only tuples that are in common between two result sets. Result sets must be equal in number and type of attributes.

SELECT ... FROM ... INTERSECT SELECT ... FROM ...;Example: What Stats Club execs have also signed up as Stats Club members?

SELECT name, quest_id FROM member INTERSECT SELECT name, questid FROM exec;name quest_id 0 Darrell Aucoin darrell 1 Dominick Byrd dominick 2 Judith B. Gibbons judith 3 Patrick Robertson patrick 4 Ruben Lamb ruben Difference (EXCEPT Operator)

EXCEPT operator: Returns the first result set minus anything it has in common with the second result set.

SELECT ... FROM ... EXCEPT [ALL] SELECT ... FROM ...-

Just EXCEPT uses set theory version of minus.

- If B has a row in common with A then all rows matching that row is removed

-

The optional ALL uses the bag semantics version.

- If B has a row in common with A then only the number of common rows in B rows matching that row is removed

Example: What Stats Club members are not execs?

SELECT name, quest_id FROM member EXCEPT SELECT name, questid FROM exec;name quest_id 0 Ann W. McLaughlin ann 1 Arnold J. Fuller arnold 2 Austin K. Gilliard austin 3 Billy L. Hunter billy 4 Bobbie D. Mathews bobbie 5 Daniel J. Moore daniel 6 Deborah D. Helfrich deborah 7 Elaine S. Ott elaine 8 Frances A. Miller frances 9 Fred E. Finch fred 10 James A. Foxt james.fox 11 James M. Eddings james 12 Janelle T. Smith janelle 13 Jeff M. Wright jeff 14 John J. Oquendo john 15 Joseph L. Wood joseph 16 Kelly S. Ferguson kelly 17 Melissa R. Anderson melissa 18 Melvin O. Martin melvin 19 Mildred F. Hottinger mildred 20 Mina W. Lawrence mina 21 Nancy P. Jackson nancy 22 Phillip C. Mascarenas phillip 23 Ralph L. Waldrop ralph 24 Robert B. Williams robert 25 Ryan T. Luby ryan 26 Stephanie R. Matthews step 27 Tameika M. McMaster tameika 28 Vivian R. Donley vivian WITH Clause

WITH clause: Makes a non-correlated subquery look like a table in the executed statement:

- Increases readability of the query as well as ensure that if it is used in several different places, it will only be executed once

WITH subquery_name [(colname1, ...)] AS (SELECT ...), subquery_name2 [(colname1, ...)] AS (SELECT ...) SELECT ...Example: Suppose you are doing a report for MathSoc detailing Stats Club events, budget, and expenses.

WITH cost (event, expenses) AS (SELECT event, SUM(price) FROM expenses GROUP BY event) SELECT e.name, e.type, e.budget, cost.expenses FROM event AS e INNER JOIN cost ON e.name = cost.event;name type budget expenses 0 BOT social 90.0 58.57 1 EOT social 160.0 160.65 2 Intro to Hadoop educational 90.0 96.81 3 Intro to SQL educational 90.0 102.77 4 Intro to SQL 2 educational 200 62.63 5 Prof Talk educational 90.0 82.87 6 Prof Talk 2 educational 90.0 77.21 7 Prof Talk 3 educational 90.0 75.68 Quiz: WITH Clause (HARD)

Q: MathSoc only provides a maximum cap on social events based on the formula below. What is the max cap for social expenses and is Stats Club over this limit? - Membership fee for Stats Club is 2 dollars

Social expenses = max{250, (MathSoc_Members) * (5 + (Member_fee))} Social expenses = max{250, (MathSoc_Members) * 7} Break the problem into smaller problems: - What are the total expenses for social events? - What is the max budget for social events?

Smaller Problems: - What are the total expenses for social events? - What events are social events?

- What is the max cap for social events?

- What is the result of the formula

(MathSoc Members) * 7 - What are the number of Stats Club members who are in the Math Faculty (aka MathSoc members)? - How do we find the max value between this number and 250?

#### Think about how to solve this for 5 mins

Solutions are here

CASE Expressions

CASE expressions: Similar to a series of if else statements executed for every entry in a table. A new value is returned for every row in the table.

CASE [column] WHEN condition1 THEN result1 WHEN condition2 THEN result2 ... WHEN condition_n THEN result_n [ELSE result] END-

The result can be of any datatype or the result of a correlated or non- correlated subquery (if the result is a single value)

-

CASE expressions are performed by themselves in the SELECT clause or within a function or aggregate function

- CASE expressions within aggregate functions allow us to do counts, sums, averages, etc. of particular occurrences

Example: Suppose like before we are preparing a report for MathSoc, but now we want to give a warning if the event is over budget or not.

WITH cost (event, expenses) AS (SELECT event, SUM(price) FROM expenses GROUP BY event) SELECT e.name, e.type, e.budget, cost.expenses, CASE WHEN e.budget - cost.expenses < 0 THEN 'Over budget' ELSE NULL END AS warning FROM event AS e INNER JOIN cost ON e.name = cost.event;name type budget expenses warning 0 BOT social 90 58.57 None 1 EOT social 160 160.65 Over budget 2 Intro to Hadoop educational 90 96.81 Over budget 3 Intro to SQL educational 90 102.77 Over budget 4 Intro to SQL 2 educational 200 62.63 None 5 Prof Talk educational 90 82.87 None 6 Prof Talk 2 educational 90 77.21 None 7 Prof Talk 3 educational 90 75.68 None Quiz: CASE Expressions

Q: Suppose we are interested in the healthiness of our food options at Stats Club events. A score of various foods was given below. What is the average ‘healthiness’ of Stats Club events?

Food Score donuts -2 pop -2 fries -2 pizza -1 cookies -1 coffee 0 water 2 meals 2 veggie platter 3 Solutions are here

ORDER BY Clause

ORDER BY Clause: Rearranges the rows of a result set according to a tuple of columns.

SELECT column_list FROM table_name ORDER BY column1, column2, .. columnN [ASC | DESC];ASCis ascending (default)DESCis descendingExample: What is the membership list ordered by name?

SELECT name FROM member ORDER BY name;name 0 Ann W. McLaughlin 1 Arnold J. Fuller 2 Austin K. Gilliard 3 Billy L. Hunter 4 Bobbie D. Mathews 5 Daniel J. Moore 6 Darrell Aucoin 7 Deborah D. Helfrich 8 Dominick Byrd 9 Elaine S. Ott 10 Frances A. Miller 11 Fred E. Finch 12 James A. Foxt 13 James M. Eddings 14 Janelle T. Smith 15 Jeff M. Wright 16 John J. Oquendo 17 Joseph L. Wood 18 Judith B. Gibbons 19 Kelly S. Ferguson 20 Melissa R. Anderson 21 Melvin O. Martin 22 Mildred F. Hottinger 23 Mina W. Lawrence 24 Nancy P. Jackson 25 Patrick Robertson 26 Phillip C. Mascarenas 27 Ralph L. Waldrop 28 Robert B. Williams 29 Ruben Lamb 30 Ryan T. Luby 31 Stephanie R. Matthews 32 Tameika M. McMaster 33 Vivian R. Donley LIMIT Clause

LIMIT Clause: Restrict the result set to the first n of rows.

SELECT column1, column2, columnN FROM table_name LIMIT no_of_rows;- We can also offset where the restriction begins

SELECT column1, column2, columnN FROM table_name LIMIT no_of_rows OFFSET row_num;Example: What is the first 10 members ordered by name?

SELECT name FROM member ORDER BY name LIMIT 10;name 0 Ann W. McLaughlin 1 Arnold J. Fuller 2 Austin K. Gilliard 3 Billy L. Hunter 4 Bobbie D. Mathews 5 Daniel J. Moore 6 Darrell Aucoin 7 Deborah D. Helfrich 8 Dominick Byrd 9 Elaine S. Ott Quiz: ORDER BY and LIMIT Clause

Q: What are the top 10 highest priced items in expenses?

Solutions are here

Further Practice Questions?

Quiz: Practice Questions

Q: What events have dates specified?

Q: What events don’t have dates specified?

Q: What Stats Club members are in Stats, Act Sci, or CS? - Recall the

INpredicate operatorQ: What are the Stats Club exec positions? - Avoid duplication of positions

Q: How many different Stats Club exec positions are there? - Note that we can use DISTINCT within aggregate functions

Topics Not Covered

- How to create a database

- Creating tables

- Creating constraints

- Window functions

- Indices

- Views

- How to insert, delete, alter, drop, etc. data in a table

-

R Programming Tutorial

This R programming tutorial was orignally created by the uWaterloo stats club and MSFA with the purpose of providing the basic information to quickly get students hands dirty using R. Fine the original here

Contents:

R Programming 101 (Beginner Tutorial): Introduction to R Presentation

Presentation in html / Presentation in pdf / Source (r markdown)R Programming Reference: complilation of useful information and code snippets

Reference in html / Reference in pdf / Source (r markdown) ## Useful Resources:Learn more R using swirl

R for Statisitical Computing

Download R from CRAN and install

Recommended supplement but not necessary: RStudio from http://www.rstudio.com/products/rstudio/download/

- R Studio: A (very matlab like) IDE for R

Comments

Comments are typed with hashtags ‘#’

# This is a comment cat("This is not a comment")No block comments. So sad. =(

Data Types

### Integers & Numerics Examples: 1,2.0,1.1,pi

c(1,2.0,1.1,pi)Infcan also be used in calculations

1/InfComplex Numbers

We can even use complex numbers.

complex(real = 1, imaginary = 2) 8+6iCharacters

Example: ‘One’, ‘1’, ‘pi’

c('One', '1', 'pi')Boolian (Logical) Values

Boolian values can take only two values:

TRUE(T) orFALSE(F).c(TRUE, FALSE, TRUE)Factors

A factor is a categorical variable that can take on only a finite set of values. i.e. Sex, Faculty in University

factor(c('Male','Female','Male','Male'))Everything is an object, including functions!

Vectors

Most common objects are called vectors.

Examples: vector of numbersa1 <- c(1,2,3) a1a2 <- c('one','two','three') a2a3 <- c('1','2','3') a3You can also create a range of values using

start:end4:10 4:-3 0.1:4Basic Numerical Operations: +, -, *, /, ^

Numerical operations are: +, -, *, /, ^

- These operate elementwise between vectors.Operator Description + Addition - Subtraction * Multiplication / Division ^ Power c(1,2,3) * c(4,5,6)Note: They don’t have to have the same length. If they don’t then the vector will recycle though the shorter vector. The longer has to be a multiple of the shorter vector.

c(1,2,3) ^ c(1,2,3,4,5,6)Logical Operators

- Return a boolan value of

TRUEorFALSE

Operator Description < Less than > Greater than <= Less than or equal to >= Greater than or equal to == Equal to != Not equal to | Elementwise Or & Elementwise And || Or && And c(TRUE, FALSE) | c(FALSE, FALSE)c(1,2,3) < c(2,1,4)Pro Tip: When interacting with number, boolians are converted to an integer: 0, or 1.

Type check

is.(typename)Example:

is.vector,is.integer,is.numeric,is.data.frame,is.matrix- Not sure which type? Use

typeof()!

is.numeric(a1) is.vector(a1) is.data.frame(a1)Assignment Operator

Assignment can come in 3 forms:

var_name <- evaluation

var_name = evaluation

evaluation -> var_namex <- 1 xBe careful: <- is not the same as < -

x < -1y = "string" y"This isn't used much" -> z zConcatenating Vectors

They are different vectors! To concatenate two vectors, use

c(vector.1, vector.2)b12 <- c(a1,a2) b12b23 <- c(a2,a3) b23 b13 <- c(a1,a3) b13b21 <- c(a2,a1) b21Notice that when combined with characters, numerics are changed into characters automatically. So

b23 == b21.b23 == b21b123 <- c(a1,a2,a3)Dot Product

To use dot product of two vectors (instead of elementwise) use

%*%a1 %*% a1 c(1,4,5) %*% c(6,7,2)##Exercise 1. What are the datatypes available in R? 2. What datatype would the vector

c(1,2,"three")be? 3. What is the vectorc(3,4,5,6)to the power of 4? 4. What elements ofc(3,4,5,6)greater than 4?##Answer 1. What are the datatypes available in R? - Numeric - Integer - Complex - Character - Boolian - Factor

- What datatype would the vector

c(1,2,"three")be?- Character

class(c(1,2,"three"))- What is the vector

c(3,4,5,6)to the power of 4?

c(3,4,5,6) ^ 4- What elements of

c(3,4,5,6)greater than 4?

c(3,4,5,6) > 4Lists

Different from vectors, they allow us to put multiple structures in a list.

- Useful when we need to store a list of objects with different datatypesl12 <- list(a1,a2) l12l23 <- list(a2,a3) l23 l13 <- list(a1,a3) l13Notice they are stored in two ‘different arrays’

as.vector,as.listcan interchange list to vectors and vectors to list via as.vector and as.listas.vector(l23) as.list(a1)Exercise

- Generate a vector of 1 to 10, a list of characters 2.1 to 2.5 separated by 0.1

- Add the list to the vector and return a vector

- Define 2 vectors, x1, x2, by using rnorm(7,mean = 13, sd = 5)

- Do the inner product of x1,x2

Answer

q1 = 1:10 #Question 1 q1c = as.list(as.character(seq(2.1,2.5,0.1))) q1 + as.numeric(q1c) # Question 2 x1 = rnorm(7,mean = 13, sd = 5) #Question 3 x2 = rnorm(7,mean = 13, sd = 5) x1 %*% x2 #Question 4Matrix

- Each column needs to contain same type.

- Like a high level vector.

M1 <- matrix(c(1,2,3,4,5,6,7,8,9),nrow=3,ncol=3) M1 M2 <- matrix(9:1 ,3 ,3) M2M3 <- matrix(c(a1,a2),2,3) M3Data Frames

- Generalised matrix. Now we can store different data types in different columns! =)

- Like high level list

df1 <- data.frame(a1,a2,a3) df1Attributes

Attribute Description namesNames of an object dimnamesNames of the dimensions of an object dimDimension of an object classClass of an object lengthLength of an object length(a1)names(a1) = c("a","b","c") a1names(df1) = c("var_1","var_2","var_3") df1dim(M1)Data Manipulation

Indices, just like linear algebra, for vectors, specify thy entry, and matrix row first then column.

a1[2] # Second entry M1[1,2] #First row second column df1[2,3] # Second row third columnM1[1,] # First row M1[,3] # Third columnYou can also Boolian values to get a subset of values:

a1[a1 <= 2]Accessing the elements of a list is slightly different. Use double

[[]]notation:l13[[1]]Assigning names to data.frame and matrices

rownames(M1) <- c('Ein','Zwei','Drei') colnames(M1) <- c('Un','Deux','Trois') M1rownames(df1) <- c('Uno','Dos','Tres') colnames(df1) <- c('yi','er','san') df1Adding new rows or columns into matrix or data.frame

rbind(): Add new row to rbind, cbindM1.rbind <- rbind(M1,M1) M1.rbindM2.rbind <- rbind(M1,M2) # Notice the names of columns and rows M2.rbindM1.cbind <- cbind(M1,M1) M1.cbindCalling by Column Names

df1$yi df1$er df1$sanReading csv/delim files

read.file_type(file = "Name.file_type", header = TRUE, sep = "")Useful functions

attach(iris) head(iris)summary(iris)print(iris)apply, sapply, lapply

- sapply, lapply takes in vectors/list

- sapply(lapply) returns a vector(list) of same length

WARNING: DO NOT USE ANY OF lapply OR sapply under normal circumstances

sapply(iris$Sepal.Width,floor)lapply(iris$Sepal.width,floor)floor(iris$Sepal.Width)Notice that this returns same thing as sapply, so there is no reason to use sapply under most of the cases.

applyis a function to apply a function to a matrix by row or column or both

apply(M2,1,min)# Minimum for each rowapply(M2,2,min)# Minimum for each columnapply(M2,c(1,2),min)# Minimum of all entries, same as min(M2)User define functions

Can predefine default value for argument(s) - Can take in vectors instead of scalars

random.walk <- function(n=1000,p=0.5, start = 0,min = 0, max = 1){ rand <- runif(n = n, min = min, max = max)/(max - min); steps <- sign(2*(rand - p)); out <- start + cumsum(steps); return(out) }plot(random.walk(),type = "l")Exercise

Use the iris dataset in R and build a matrix call iris.matrix with the followings:

1. Columns and rows of iris corresponds to columns and rows of iris.matrix

2. Change the Species column of iris.matrix into the following indicator variables

- 1 - setosa, 2 - versicolor, 3 - virginica

3. Get the mean of every column except for Species column

4. Take away respective mean from each column except for the Species column

5. Produce the summary of the new matrixFuther Notes

browser()is useful to help debugging, talk about this later?function_nameis useful to look up what a function does

Interesting reads:

ggplot2: Plot package based around “the grammer of graphics”data.table: Package showcasing a faster version of data.frameNext Steps:

R Programming Reference: http://rpubs.com/uwaterloodatateam/r-programming-reference

Contribute useful code snippets: https://github.com/uWaterlooDataTeam/r-programming-tutorial

-

How to Install the Python Data Science Stack on Linux or a Remote Linux Server

Beginners Tutorial for How to Get Started Doing Data Science using Servers provided us with a background of why using servers are useful for data scientists and how setup and connect to a server using SSH. In this section we will learn how to install python and the common packages data scientists use with python which we call the PyDataScience Stack. We will also learn how to setup and use Jupyter get started with data analysis with python. This guide should also work for people using Mac and Linux on their local machines.

Anaconda Distribution

Continuum Analytics has developed a Anaconda Distribution which provides an installer and package management for the PyDataScience stack. While being free to use (even for commercial), it is not fully open sourced and their script (.sh file) includes precompiled binary code. Precompiled binary means that there was original source code used to generate the code you would be running. It is not recommended to blindly use precompiled code since the content of the original source code is unknown and can create security flaws on your server. For this reason is better to install packages on our own or using a fully open sourced package manager. If you choose to take the simpler (but not better route) of using the Anaconda distribution the specific instructions for your system (Linux, Mac and Windows) can be found on the downloads page

Installing the PyDataScience Framework Manually

First let us install some common utilities which are useful for using servers:

sudo apt-get -y install git curl vim tmux htop rangerNext let us install python, python development and python-pip:

sudo apt-get -y install python2.7 python-dev python-pipWe an also install some optional python libraries which are popularly used:

sudo apt-get -y install python-serial python-setuptools python-smbusNext we need to set up virtual environments for python. A Virtual Environment is a tool to keep the dependencies required by different projects in separate places, by creating virtual Python environments for them. It solves the “Project X depends on version 1.x but, Project Y needs version 4.x” dilemma, and keeps your global site-packages directory clean and manageable. For example, you can work on a data science project which requires Django 1.3 while also maintaining a project which requires Django 1.0.

Let us get started by installing virtualenv using pip

pip install virtualenvIf that command gave you an error, then it might be necessary to run all pip commands as super user (

sudo pip install virtualenv) insteadNow lets create a folder in the home directory (~/) which will contain the Python executable files, and a copy of the pip library which you can use to install other packages. The name of the virtual environment (in this case, it is data-science) can be anything; omitting the name will place the files in the current directory instead.

cd ~/ mkdir venv pushd venv virtualenv data-science popdNow let us activate our virtual environment.

source ~/venv/data-science/bin/activateThe name of the current virtual environment will now appear on the left of the prompt (e.g. (venv)Your-Computer:your_project UserName$) to let you know that it’s active. From now on, any package that you install using pip will be placed in the venv folder, isolated from the global Python installation. This means that When the virtual environment is activated, when anything is installed using pip, it is installed in the virtual environment, not in your system’s python. This means you can have different version of libraries installed and if an installation breaks, you can simply delete the corresponding venv folder (data-science in this case) and start over without damaging the global Python installation.

If you want to deactivate the virtual environment, it is as simple as using the deactivate command:

deactivateInstalling the Required Python Packages

If the virtual environment is not active, we can activate it again:

source ~/venv/data-science/bin/activateNext let us upgrade our python setup tools and install compilation requirements in python:

pip install --upgrade setuptools pip install virtualenvwrapper pip install cython pip install noseNow that we have all of the requirements, let us begin by installing the SciPy stack

sudo apt-get -y install python-numpy python-scipy python-matplotlib ipython ipython-notebook python-pandas python-sympy python-noseIn the future you might also want to add the NeuroDebian repository for extra SciPy packages but for now let us move onto installing Jupyter notebook:

pip install jupyterJupyer notebook (or “notebooks”) are documents produced by the Jupyter Notebook App which contain both computer code (e.g. python) and rich text elements (paragraph, equations, figures, links, etc…). Notebook documents are both human-readable documents containing the analysis description and the results (figures, tables, etc..) as well as executable documents which can be run to perform data analysis.

Since some packages require matplotlib, we If you are on a Mac or Linux, and pip install matplotlib did not work, you are probably missing the supporting packages zlib, freetype2 and libpng. Let us install the requirements:

sudo apt-get -y install libfreetype6-dev libpng12-dev libjs-mathjax fonts-mathjax libgcrypt11-dev libxft-dev pip install matplotlibNext let us install the requirements for Seaborn, then install Seaborn itself, which is a very powerful statistical plotting library:

sudo apt-get install libatlas-base-dev gfortran pip install SeabornA very complimentary powerful statistics library is statsmodels

pip install statsmodelsLet us now install one of the most powerful and commonly use machine learning library scitKit-learn

pip install scikit-learnNow let us install some other python libraries which commonly used for data science:

pip install numexpr && pip install bottleneck && pip install pandas && pip install SQLAlchemy && pip install pyzmq && pip install jinja2 && pip install tornadoNext let us install two powerful packages for text processing: natural language toolkit and gensim: topic modelling for humans

pip install nltk pip install gensimWe have now installed the most popular python libraries used for data science. There are many more packages which can be installed if necessary, but we have installed enough to get started. If you would prefer to use a dark theme for Jupyter notebook, you can follow the next subsection or you can move onto install R and Rstudio.

Jupyter Notebook Custom Themes (Optional)

This step is optional. Just like any other development environment, with Jupyter notebook, you can change the theme, color scheme and syntax highlighting. I personally prefer dark themes such as Oceans16, you can follow the following steps to choose a different theme.

pip install git+https://github.com/dunovank/jupyter-themes.gitWe are going to install theme (-t) for ipython/jupyter version > 3.x (-J) with the toolbar (-T)

jupyter-theme -J -t oceans16 -TRunning Jupyter Notebook

Before we started running python code on our server, let us set some safety constraints to ensure that our code does not use all the CPU and memory resources. The first step is determining how much memory our system is using using the

freecommand:freeA sample output using the corn-syrup server from uWaterloo’s csclub is the following:

total used free shared buff/cache available Mem: 32947204 981132 201380 271260 31764692 31568324 Swap: 0 0 0Under the total column, the first number is the total memory available in kbytes. This means that there is 32947204 kbytes of RAM total or about 32 GB. It is generally suggested to not exceed 75% of memory to ensure the system does not hang or freeze, but you are free to set the limit to what ever you think is best. You can limit the memory using the following command

ulimit -m 20000000 ulimit -v 20000000

The

-mis for the total memory, and the-vis for the virtual memory. In this example, the limit is set to 20000000 kbytes, which is equivalent to around 20GB.You can see the limits by the following command:

ulimit -aA sample output is the following:

core file size (blocks, -c) 0 data seg size (kbytes, -d) unlimited scheduling priority (-e) 0 file size (blocks, -f) unlimited pending signals (-i) 13724 max locked memory (kbytes, -l) 64 max memory size (kbytes, -m) 20000000 open files (-n) 1024 pipe size (512 bytes, -p) 8 POSIX message queues (bytes, -q) 819200 real-time priority (-r) 0 stack size (kbytes, -s) 8192 cpu time (seconds, -t) unlimited max user processes (-u) 13724 virtual memory (kbytes, -v) 20000000 file locks (-x) unlimitedSpecifically we can see the memory limits by piping the output to the grep command and searching for the keyword memory:

ulimit -a | grep memoryThe output is:

max locked memory (kbytes, -l) 64 max memory size (kbytes, -m) 20000000 virtual memory (kbytes, -v) 20000000Next we can optionally run Jupyter using tmux so that is can run in the background, and not be terminated if you close terminal. Tmux is a very popular terminal multiplexer. What is a terminal multiplexer? It lets you switch easily between several programs in one terminal, detach them (they keep running in the background) and reattach them to a different terminal. This guide outlines how to install and use tmux, and this guide outlines how to configure tmux. We can how install tmux:

sudo apt-get update && sudo apt-get -y install tmuxYou automatically login a tmux session to your default shell with your user account using the following command:

tmuxThe default binding key to send commands to tmux is holding Ctrl and hitting b (Ctrl-b) You can detach by hitting

Ctrl-b dYou can reconnect by running the following command:

tmux attachThe two guides above outline more advanced things you can do with tmux such as creating multiple windows or panes. Once we have set the limits for the memory and are optionally running tmux, we can we can now run Jupyter notebook using the following command:

nice jupyter notebookWe use the nice command to lower the priority of the code running in Jupyter. This is important since if you are running very CPU intensive code, it will run at a lower priority. This means that not only will it not disrupt others using the server (if it is a shared resource) but it will also ensure that the intensive code has a lower priority than important system functions.

When you run Jupyter notebook, it runs on a specific port number. The first notebook you are running will run generally on port 8888 so you can simply navigate to localhost:8888 to connect to Jupyter notebook.

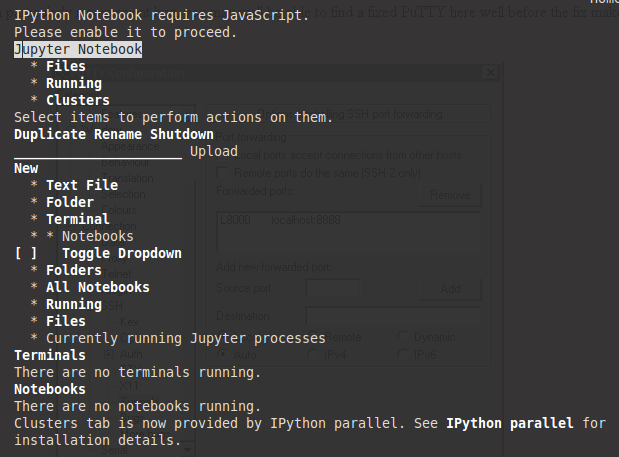

If you are running Jupyter on a a server without a web browser, it will still run but give you an error stating that Ipython notebook requires JavaScipt as shown below. This is expected since JavaScript is not necessary on a server without a web browser. You can type q and then enter on the keyboard and confirm by to closing w3m by hitting y and then enter which will remove the error message (and show you which port Jupyter is running on).

Since you are using tmux you can create a new pane or a new window and run the following command to monitor the system (and Jupyter):

htopIf you are not using tmux, you can open another ssh session to the server the run htop. Using htop, we can move around using the arrow keys, sort the processes by hitting F6, change the priority level using F8 and kill processes using F9. If you are using Mac and following these instructions on your local machine, you will need to run it using a super user (run

sudo htop). In the next section We will learn how to connect to the web browser and use Jupyter as if it was running on your local computer.Since Jupyter was run using nice, all python scripts run in Jupyter will appear in blue on htop indicating that they are running at a lower priority (compared to green and red).

Connecting to the server using SSH Tunneling

In this section we will learn how to connect to Jupyter notebook using SSH tunneling. While SSH tunneling might sound scary, the concept is very simple. Since Juptyer notebook is running on a specific port such as 8888, 8889 etc, SSH tunneling enables you to connect to the server’s port securely. Since we are used to running Jupyter notebook on a specific port number, we can create a secure tunnel which listens on the the server’s port (8888 for example) and securely forwards a port on our local computer on a specific port (8000 for example).

Mac or Linux

If using Linux of Mac, this can be done simply by running the following SSH command:

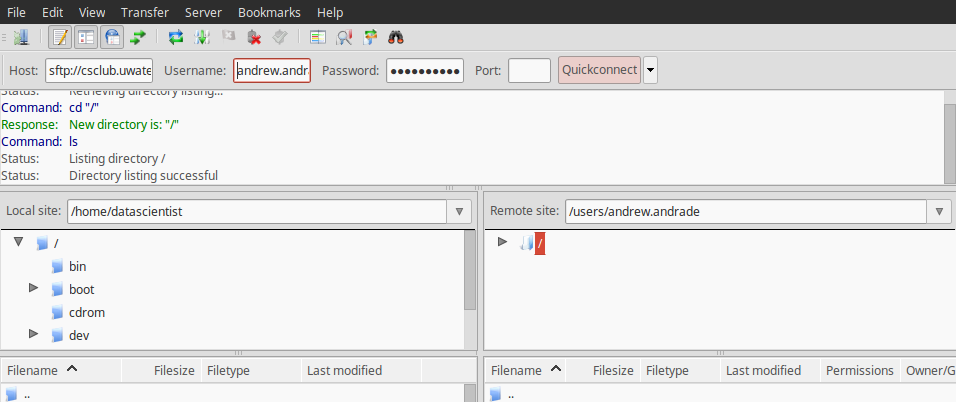

ssh -L 8888:localhost:8000 server_username@<server ip address>You should change 8888 to the port which Jupyter notebook is running on, and optionally change port 8000 to one of your choosing (if 8000 is used by another process). Your server_username is your username on the server and the

<server ip address>could be an actual IP address or a URL depending on who is providing your virtual machine. In the csclub example, the server_username is your csclub username (generally your quest ID) and the server ip would be for example corn-syrup.uwaterloo.ca. The command I would run if my username is andrew.andrade is:ssh -L 8888:localhost:8000 andrew.andrade@corn-syrup.uwaterloo.caIf no error shows up after running the

ssh -Lcommand and you tunneled the port to 8000 (for example), you can simply navigate to localhost:8000 in your favourite web browser to connect to Jupyer notebook running on the server!Windows

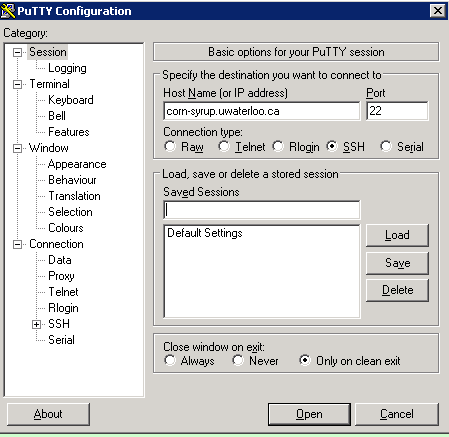

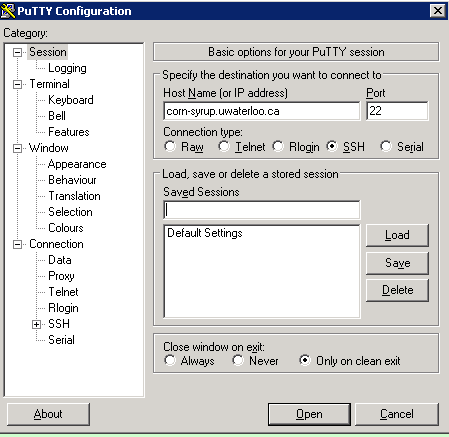

If you are using windows, you can also easily tunnel ssh using putty. As you may remember, you SSH into a server, you enter the server url into the host name as shown in the figure below (for connecting to corn-syrup from the uwaterloo csclub).

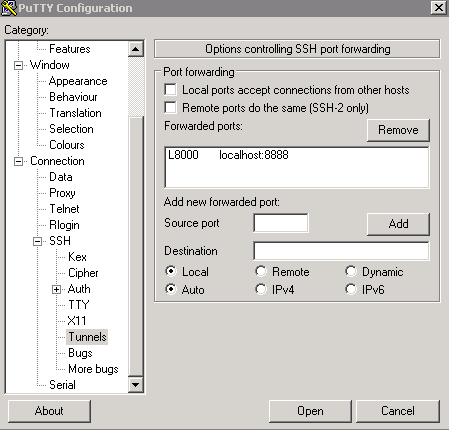

Next click the

+ SSHon the bottom left, and then click tunnels. You can now enter the port you want to access Jupyter on your local machine (for example 8000), and set the destination aslocalhost:8888where 8888 is the number of the port that Jupyter notebook is running on. Now click the add button and the ports should appear in the Forwarded ports: section.For the example of uWaterloo’s csclub, my Putty configuration screen looks like the following:

Finally you can hit open, and you will now both connect to the server via SSH and tunnel the desired ports. If no error shows up and you tunneled the port to 8000 (for example), you can simply navigate to localhost:8000 in our favourite web browser to connect to Jupyter notebook running on the server!

Using Jupyter notebook

Jupyter notebook will show all of the files and folders in the directory it is run from. We can now create a new notebook file by click new > python2 from the top right. This will open a notebook. We can now run python code in the cell or change the cell to markdown (by Cell > Cell Type > Markdown) and write notes and even put equations in by putting them between the

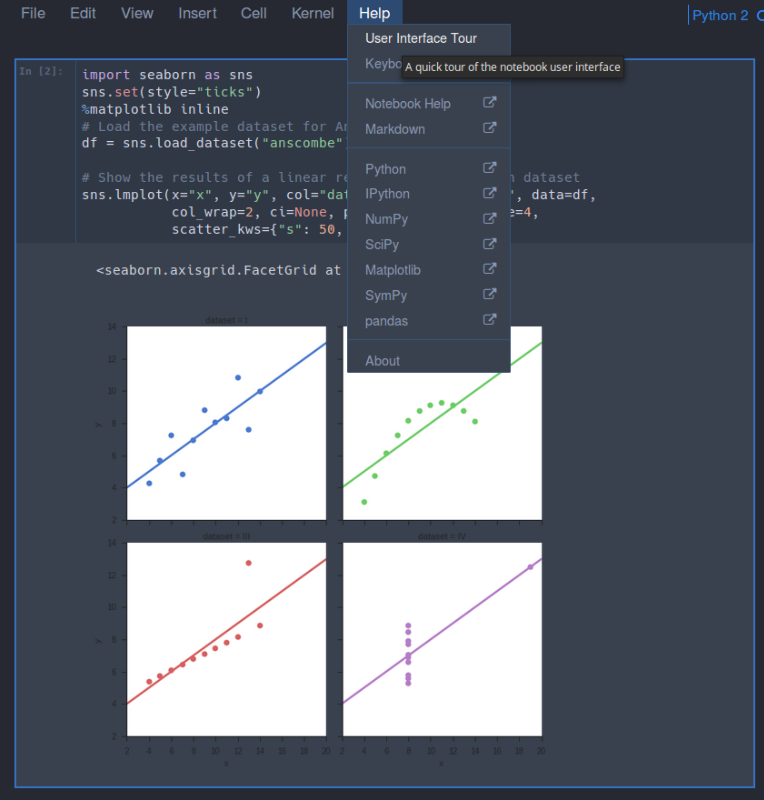

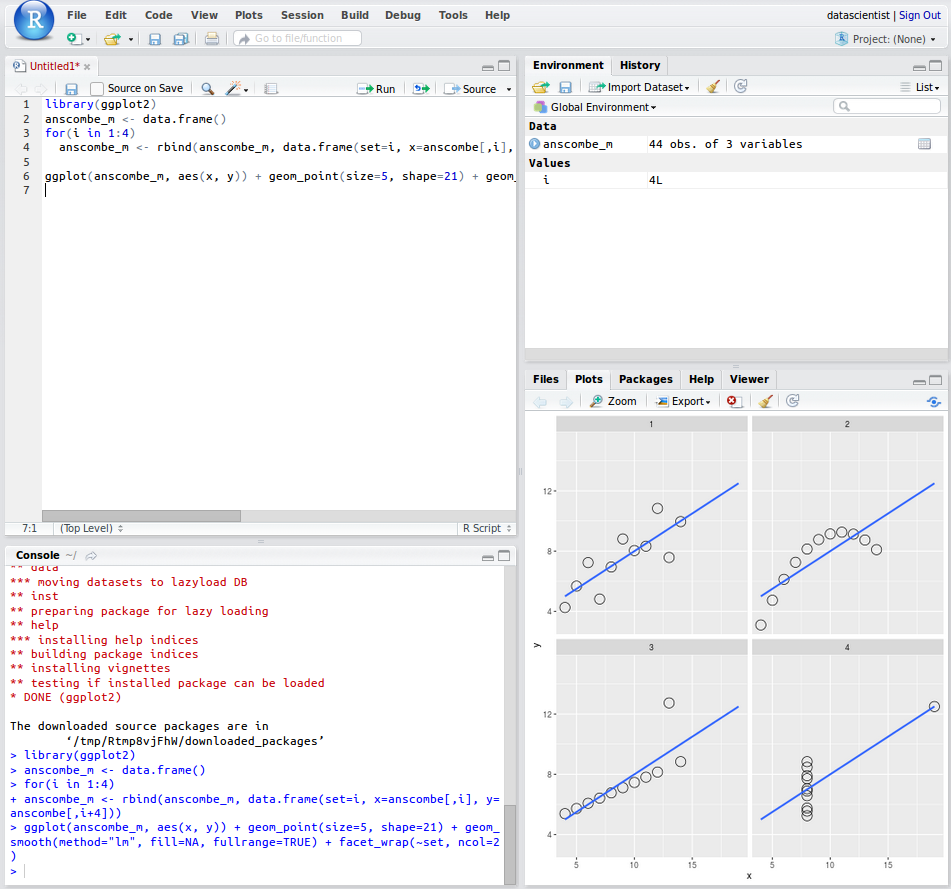

$$symbols. For example, to generate you can write the following$$ y = x^2$$You can also download Jupyter Notebooks off the internet and open them in Jupyer. For now let us make a simple plot from the Anscombe’s quartet using the seaborn library. We can do this by just typing the following into the first cell

import seaborn as sns sns.set(style="ticks") %matplotlib inline # Load the example dataset for Anscombe's quartet df = sns.load_dataset("anscombe") # Show the results of a linear regression within each dataset sns.lmplot(x="x", y="y", col="dataset", hue="dataset", data=df, col_wrap=2, ci=None, palette="muted", size=4, scatter_kws={"s": 50, "alpha": 1})Please note we use

%matplotlib inlinefor the plots to appear inline in the notebook (instead of a pop-up). When using Jupyter Notebook, the easiest way to see a plot is in-line.To get a quick tour of Jupyter notebook, you can go to Help > User Interface Tour as show below:

Once you have installed everything and Jupyter is running you can move onto to installing R and RStudio by following the Absolute Beginners Tutorial for Getting Started Doing Data Science using Servers.

-

Hands on Hadoop tutorial

This tutorial was originally created by Darrell Aucoin for the Stats Club. Follow along with the orginal and additional files here.

In pioneer days they used oxen for heavy pulling, and when one ox couldn’t budge a log, they didn’t try to grow a larger ox. We shouldn’t be trying for bigger computers, but for more systems of computers. —Grace Hoppe

Motivation

Q: Why do we need Hadoop when we have SQL?

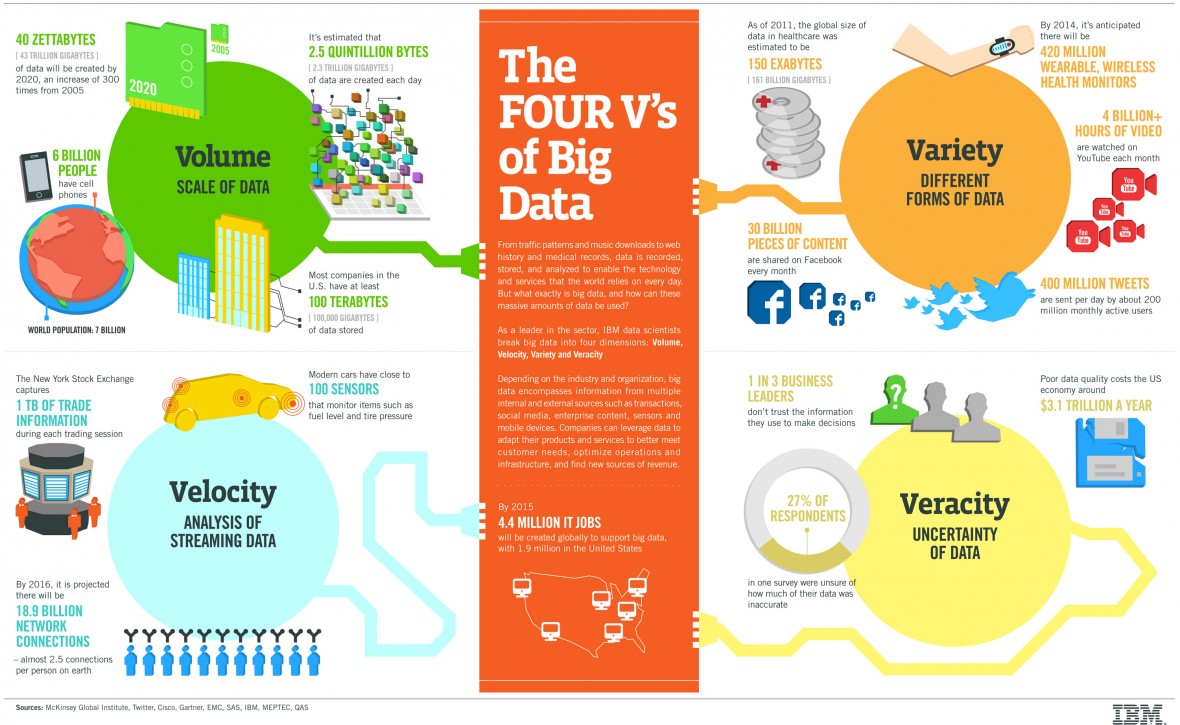

Our capability of storing data has outpaced our ability to read it

Harddrives speeds have not improved as fast as their capacity

Processing capacity have bottlenecked, increasing the need for parallelization of tasks

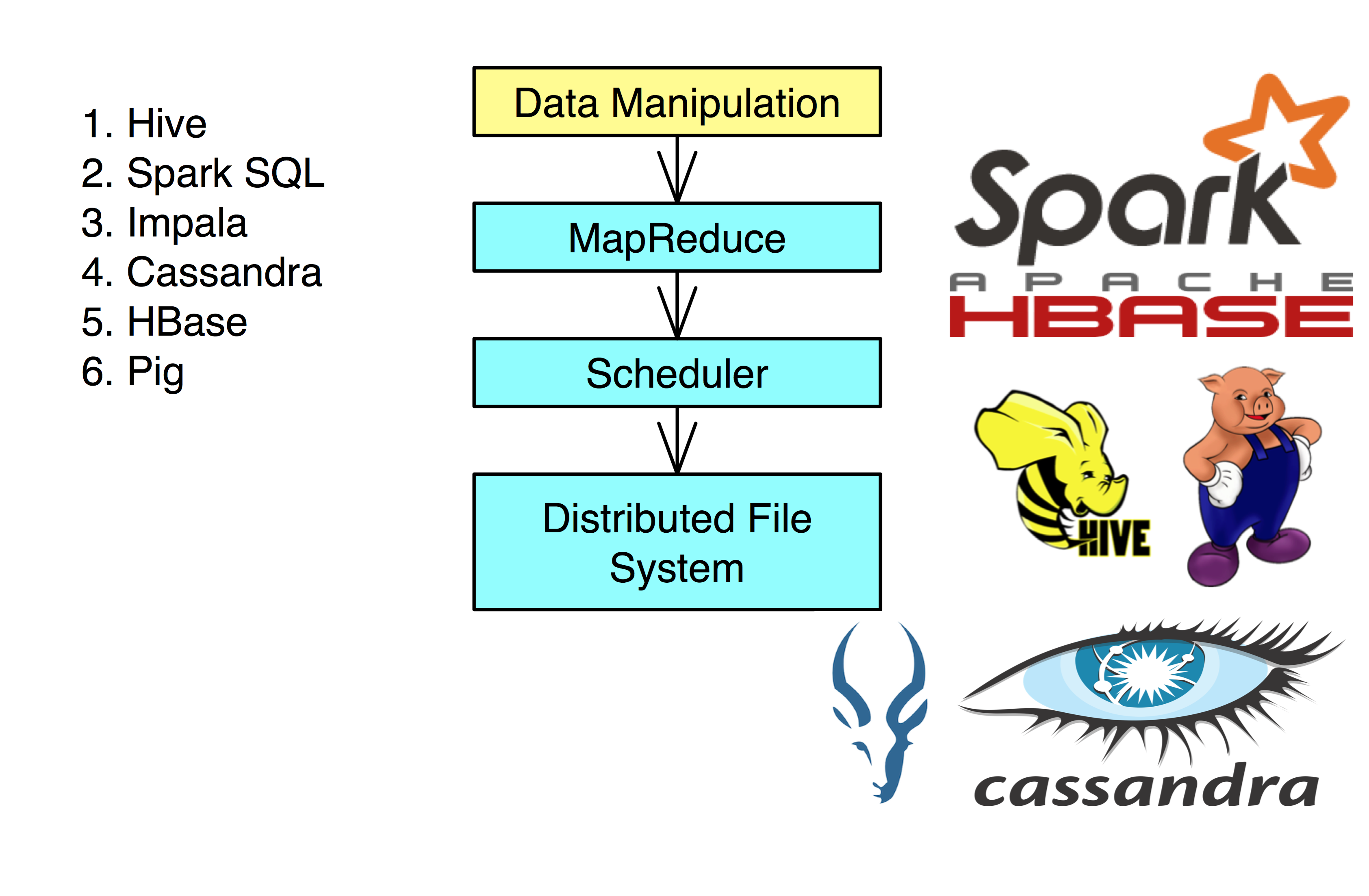

SQL servers do not scale very well in comparison to Hadoop SQL is best for Gigabytes of data, Hadoop for Terabyes/Petrabyes of data SQL skills are still useful in a Hadoop ecosystem (Hive, Spark SQL, Impala)

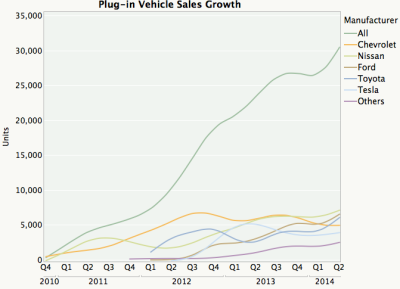

Q: Who uses Hadoop?

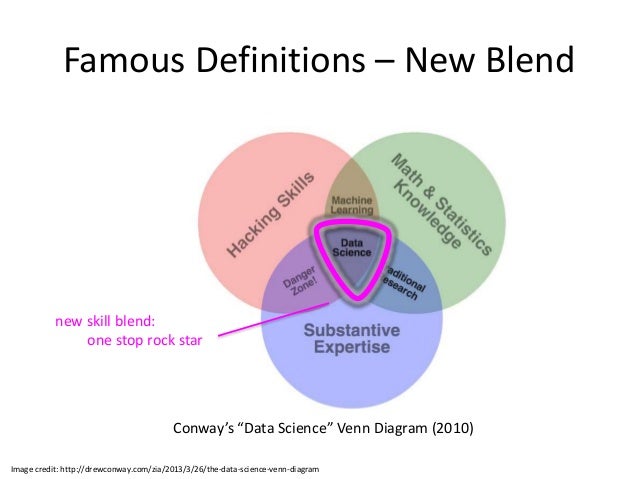

Q: Why learn Hadoop?

According to O’Reilly Data Science Survey,knowledge of Hadoop and related tools are correlated to the highest salaries in Data Science The highest salaries are correlated to joint knowledge of Hadoop and SQL

Hadoop vs RDBMS (SQL)

t SQL Hadoop Data Size Gigabytes Terabyte/Petrabytes Access Interactive and batch Batch Updates Read and write many times Write once, read many times Integrity High Low Scaling Nonlinear Linear Schema Write-on Schema Read-on Schema Data formating Normalized Optimally De-normalized Structure Static schema, highly structured Dynamic schema, Semi-structured When going from a RDBMS to Hadoop, the biggest trade off is the guanrantee of atomicity, consistency, isolation, and durability for scalability.

Write-on Schema: Information is inputted, transformed and written into the predefined schema: we can enforce consistency through this.

Read-on Schema: Bring in files without any predefined gatekeeping or consistency services. The schema is created when reading the files using a set of predefined rules. For instance, a comma seperated file could have it’s first entry interpretated as a string, the second as an integer, the third as a float, etc.

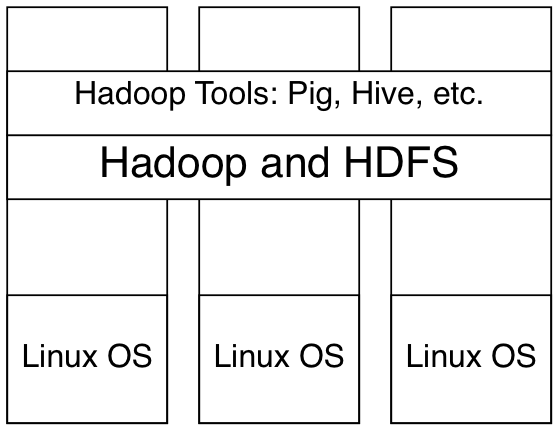

What is Hadoop?

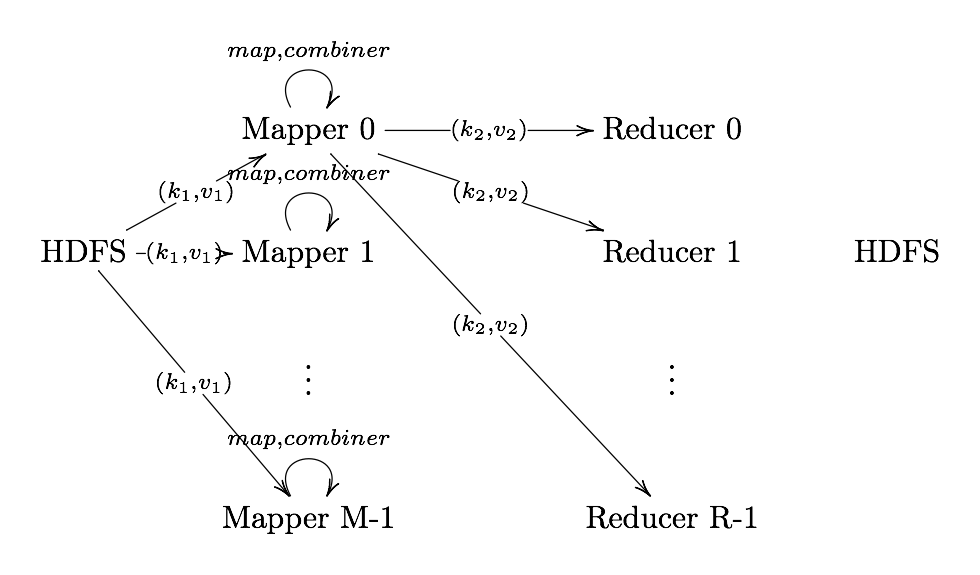

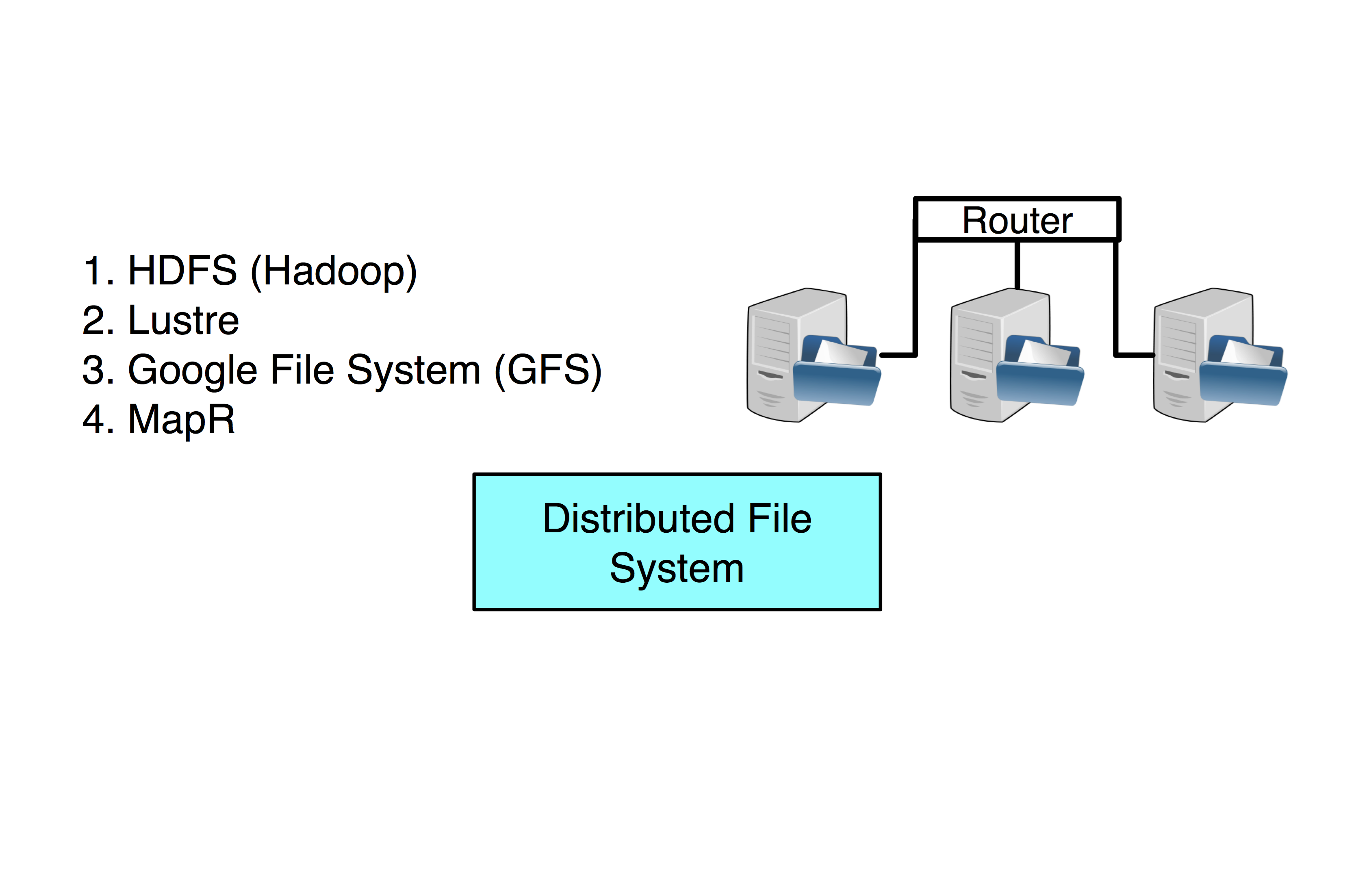

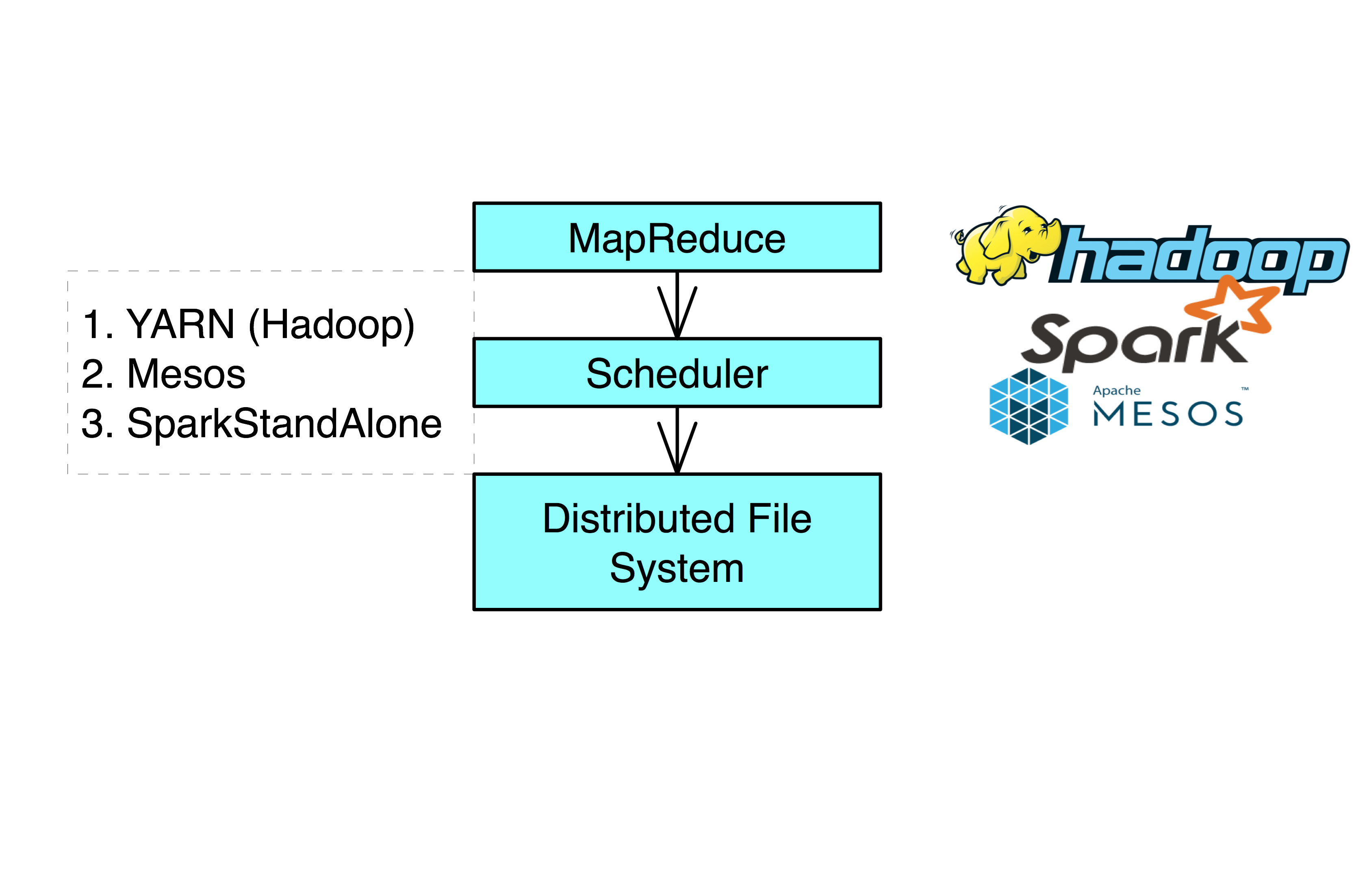

Definition. Hadoop is a MapReduce framework built on top of a distributed file system (HDFS for Hadoop).

Hadoop is distributed system, meaning it’s an interconnected system of computers that looks to the user as just one single entity.

When a client submits a MapReduce Job, the job is split into several tasks and the nodes takes on a fixed number of tasks per node Written in mainly in Java, with some components written in C and bash Designed around processing high throughput of large data rather than response times Hadoop is highly configurable, both in it’s operations and job configurations

Note:

All components and modules have to be installed on every node in a cluster (i.e. to use a module in R, the module has to be installed on every node)

A single node may be running several tasks from several different jobs

Hadoop V1.x and V2.x

Due to compatiability breaking problems with V1.x, V2.x was created: 2.x allows Hadoop Streaming to stream binary data compared to just text with 1.x 2.x is more scalable, 1.x has a bottleneck in it’s design (a singular NameNode) Various Hadoop configuration options changed names

What is MapReduce?

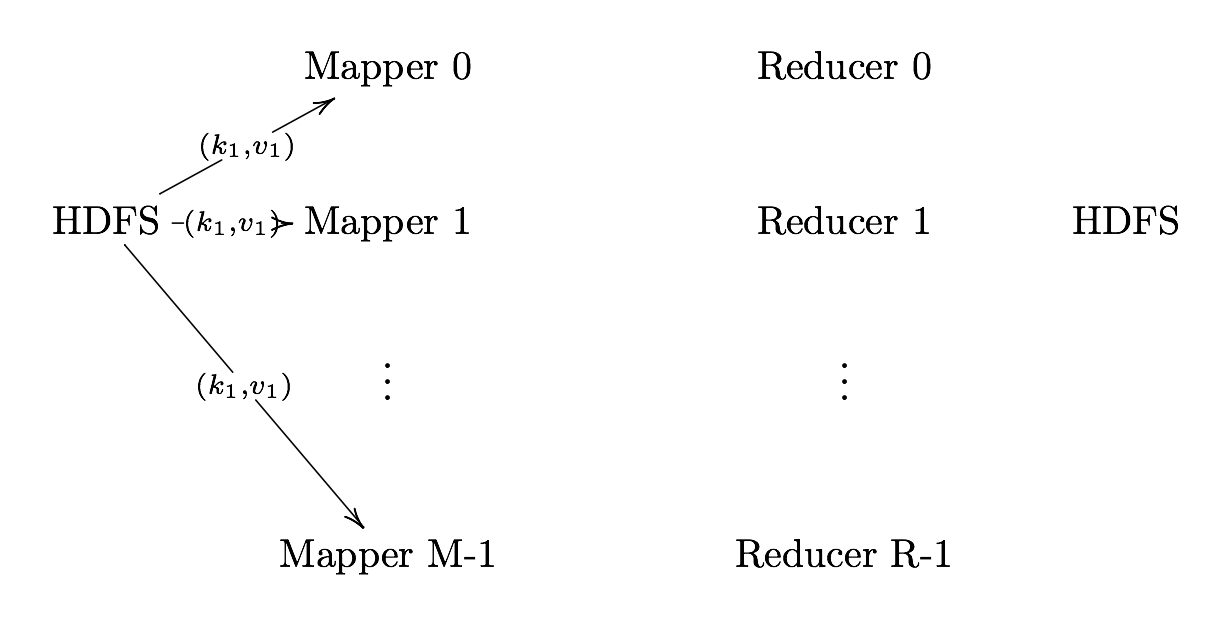

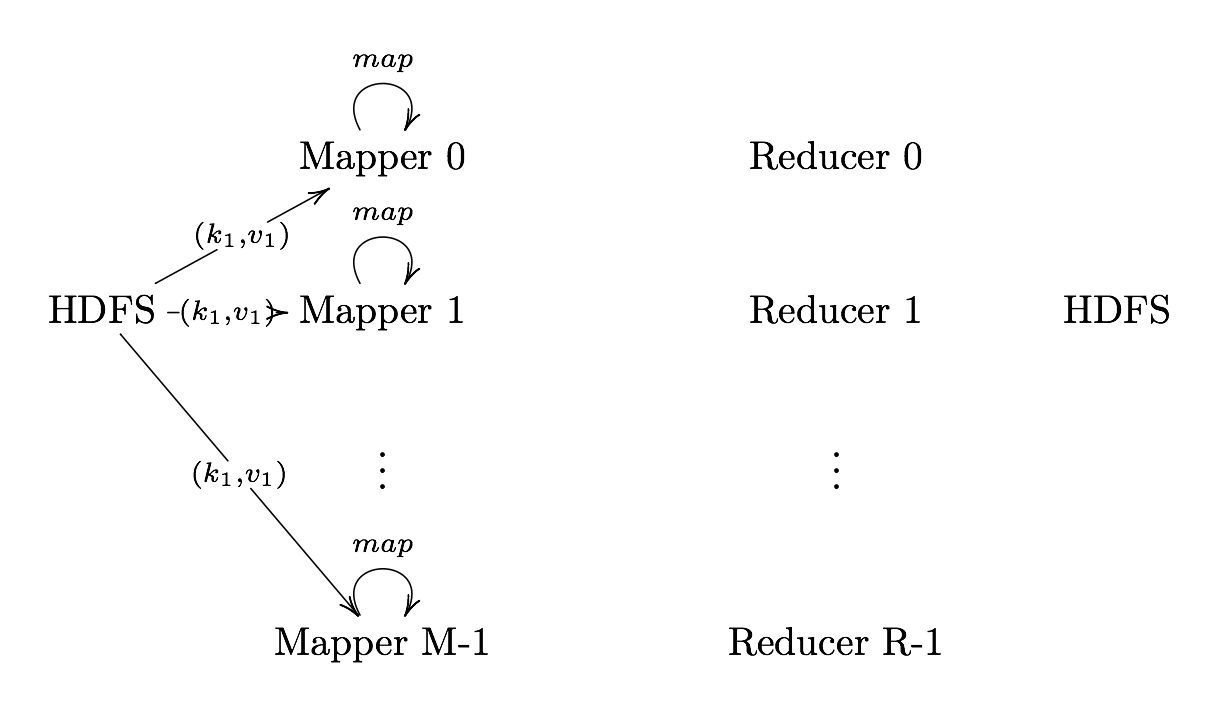

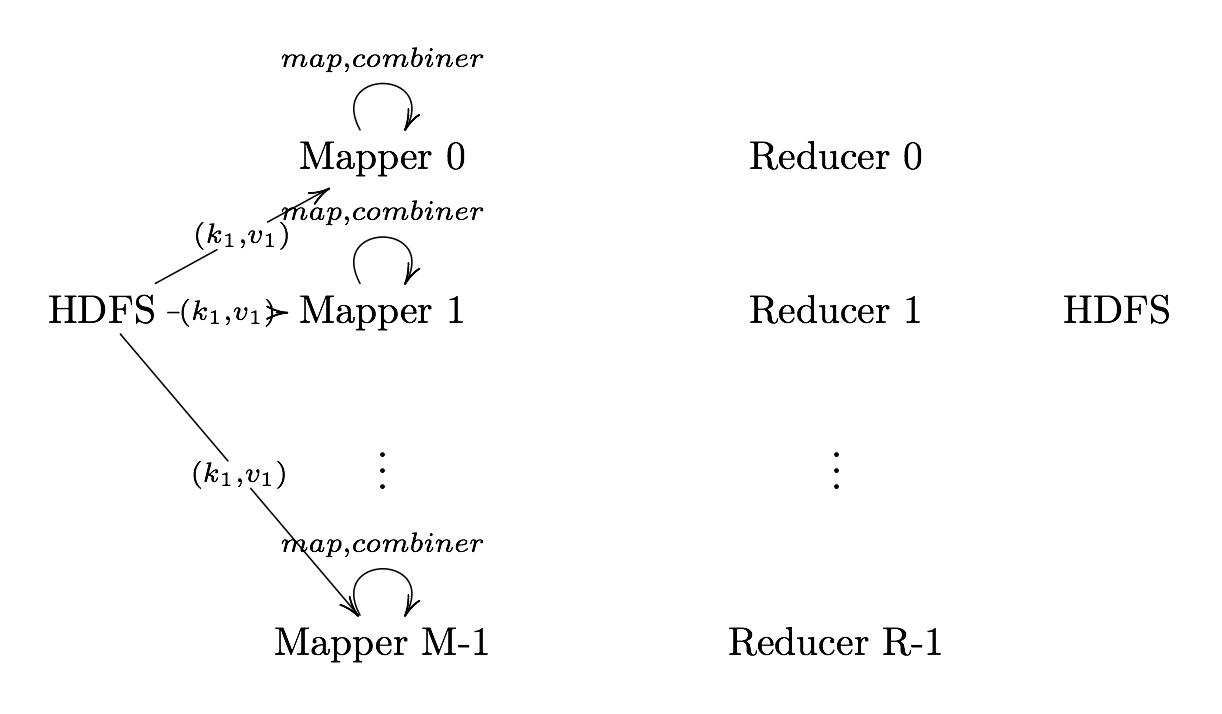

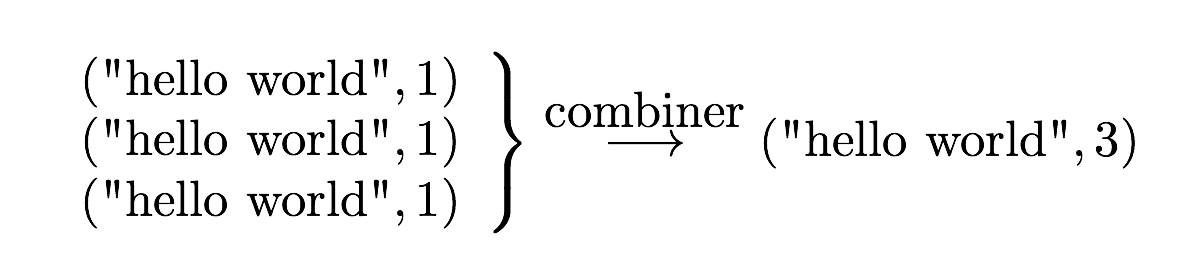

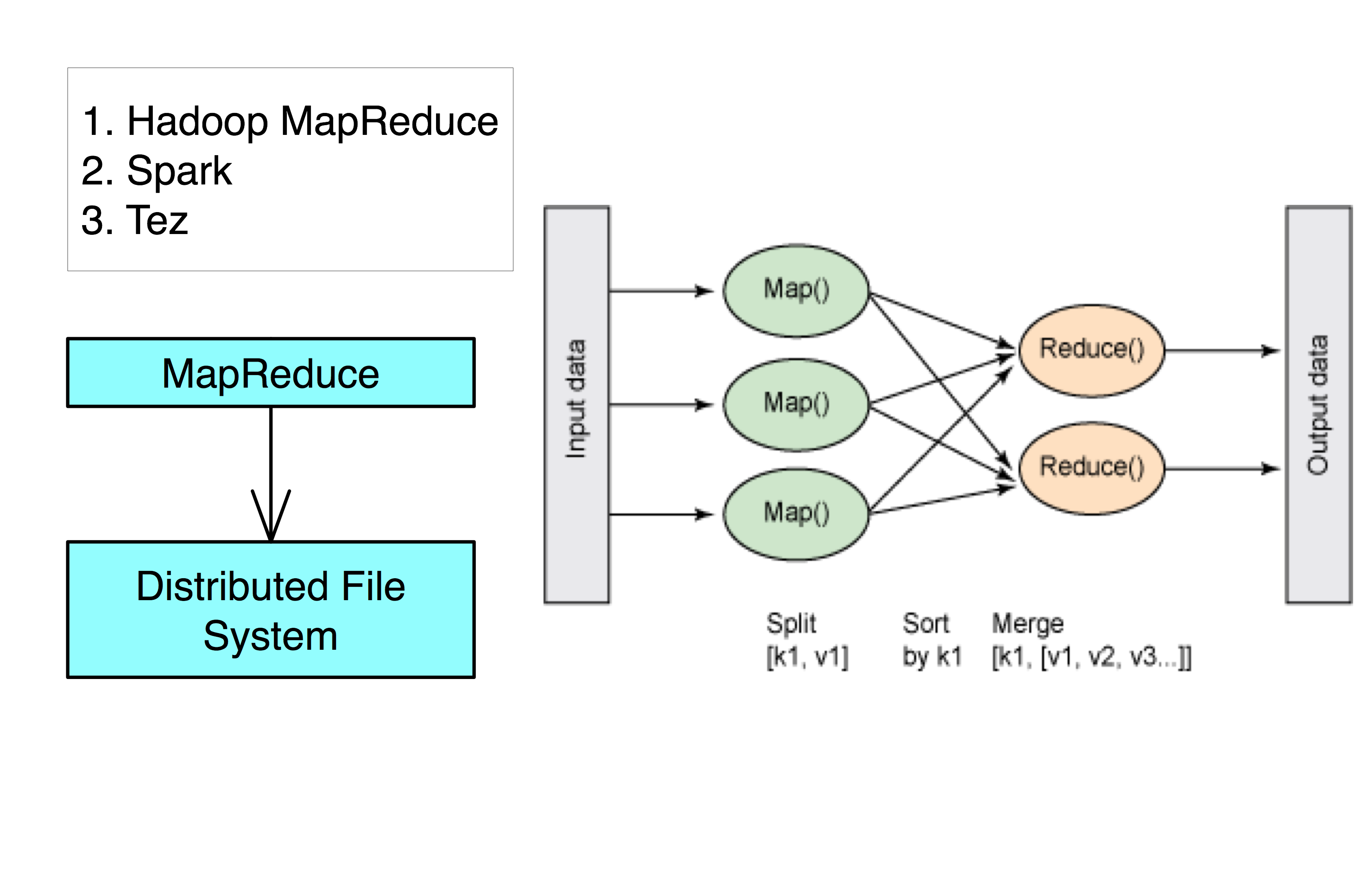

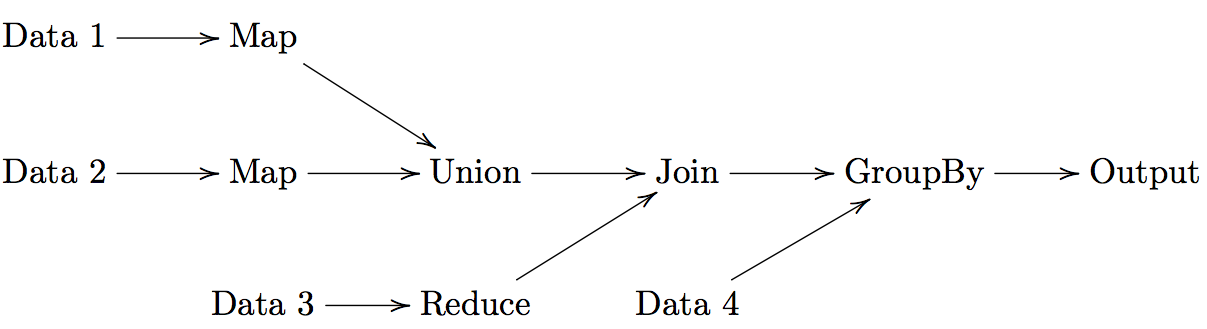

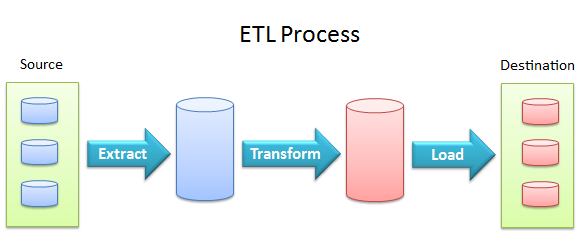

Definition. MapReduce is a programming paradigm model of using parallel, distributed algorithims to process or generate data sets. MapRedeuce is composed of two main functions:

Map(k,v): Filters and sorts data.

Reduce(k,v): Aggregates data according to keys (k).

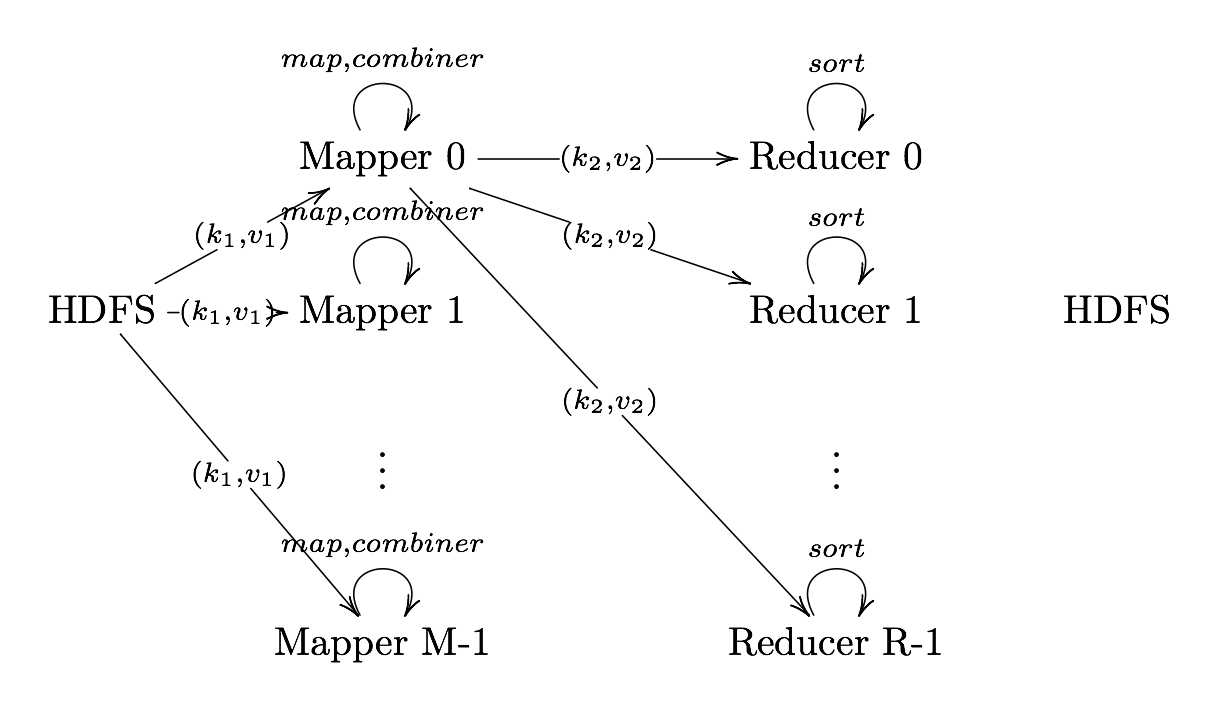

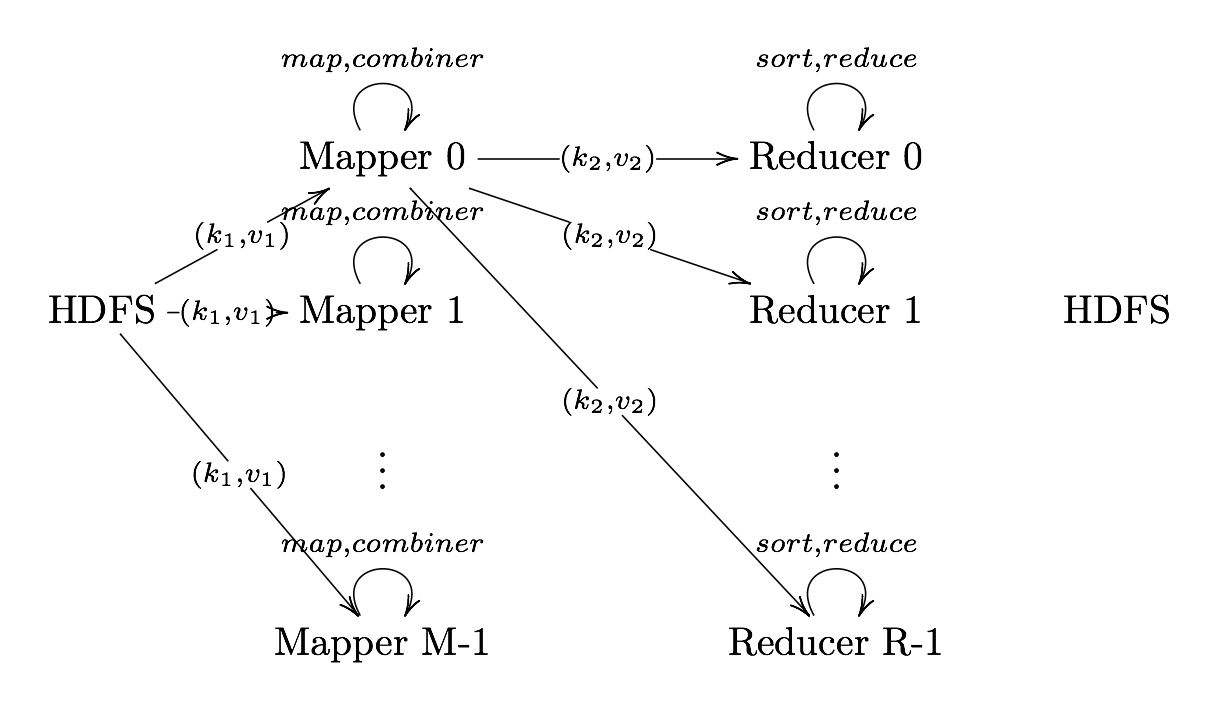

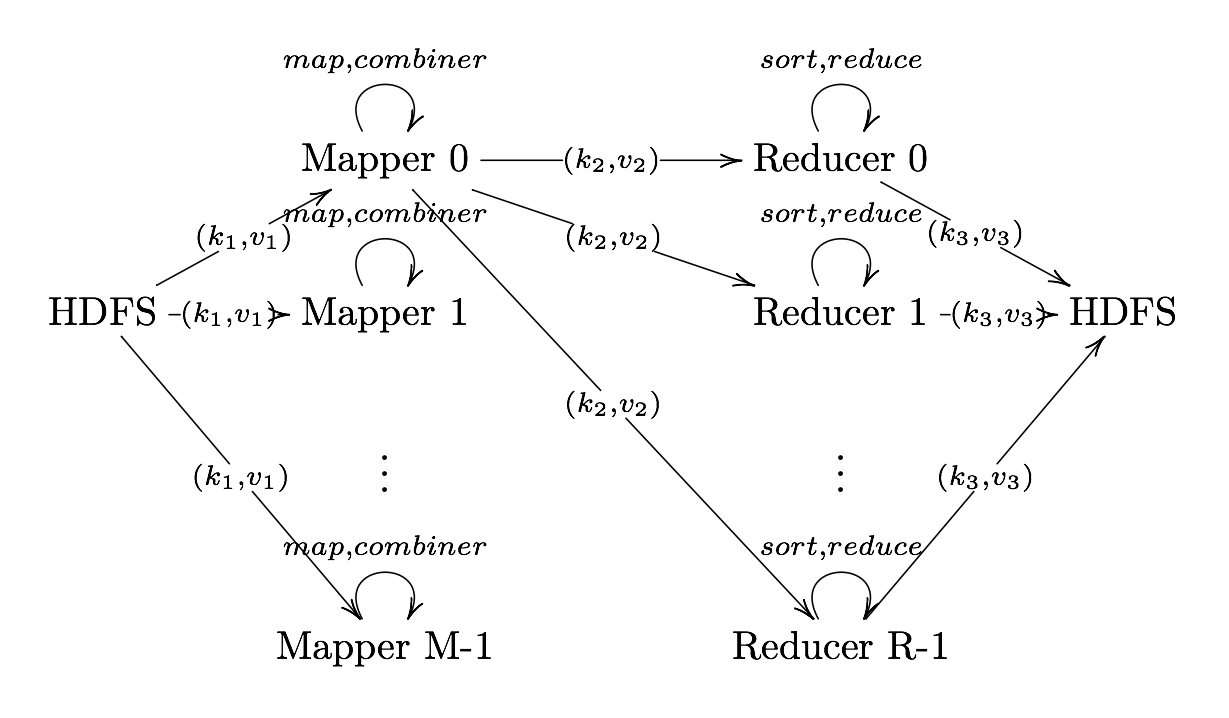

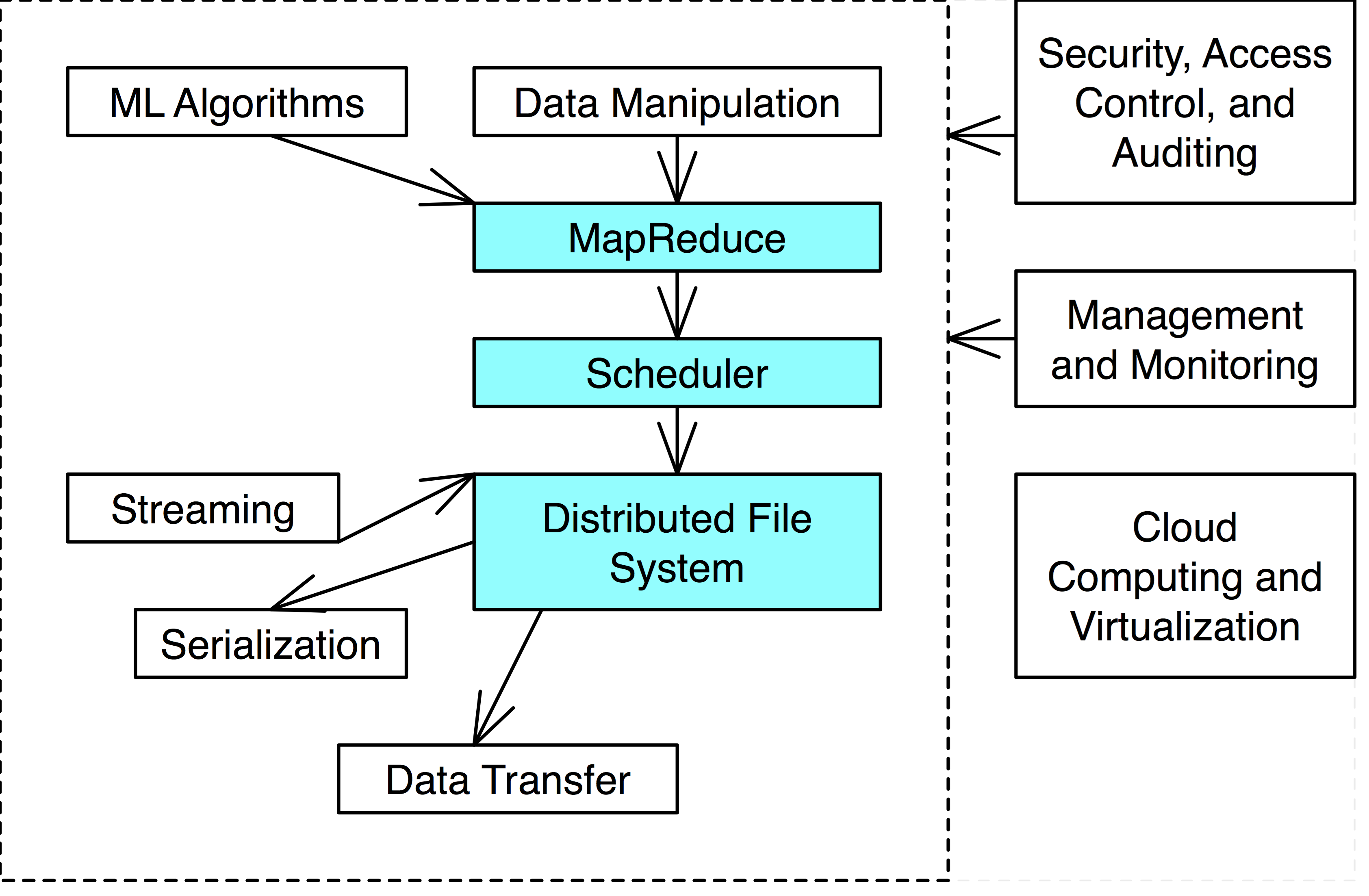

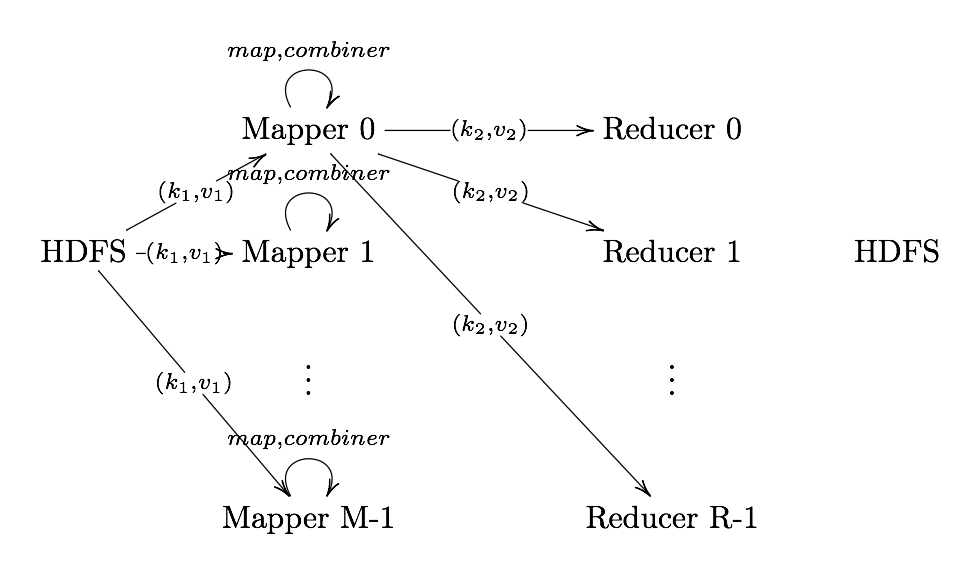

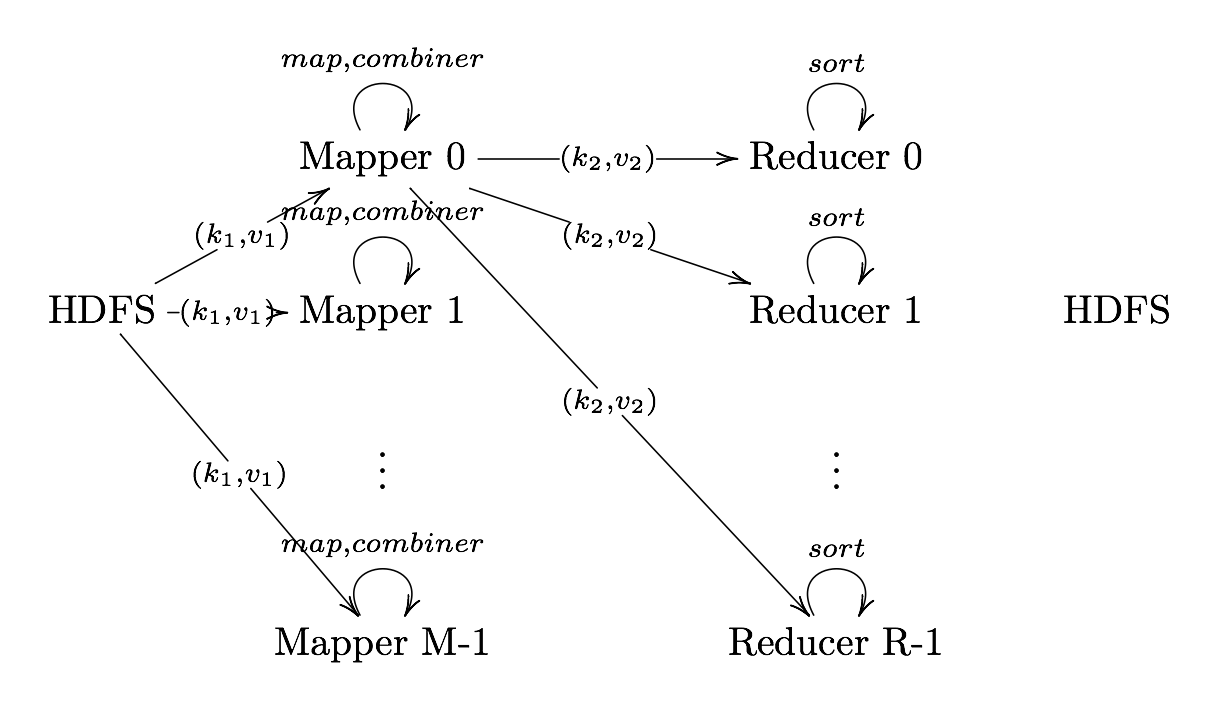

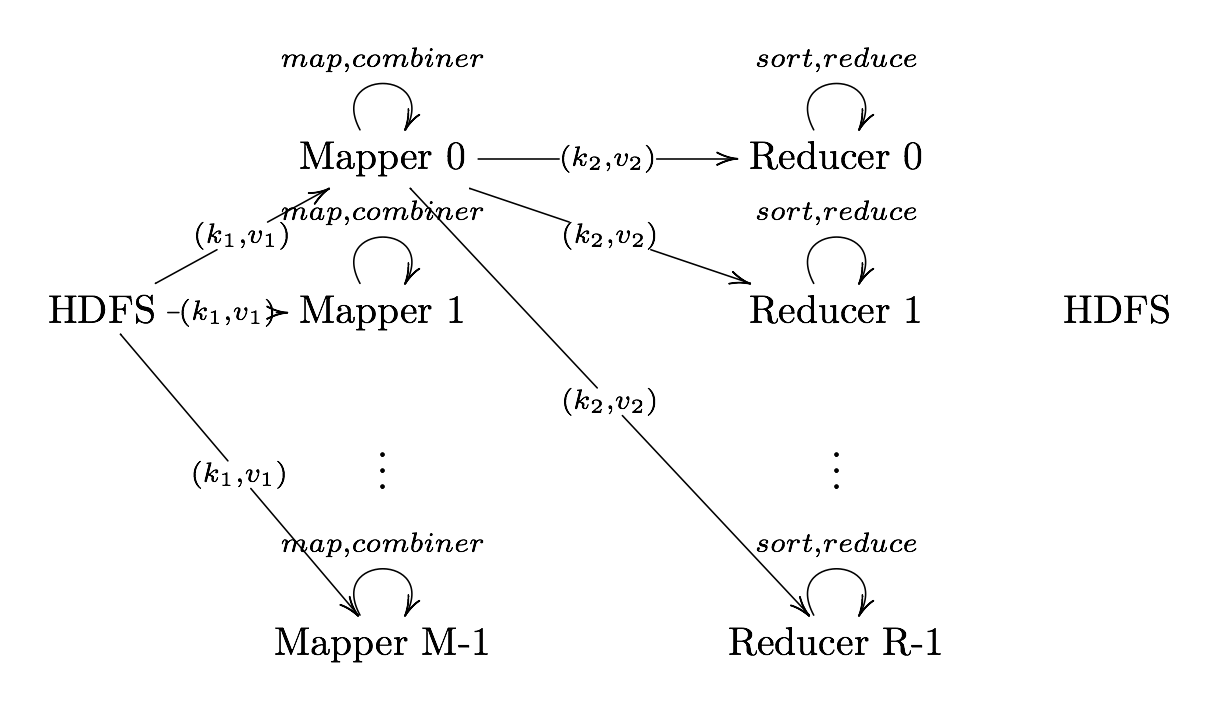

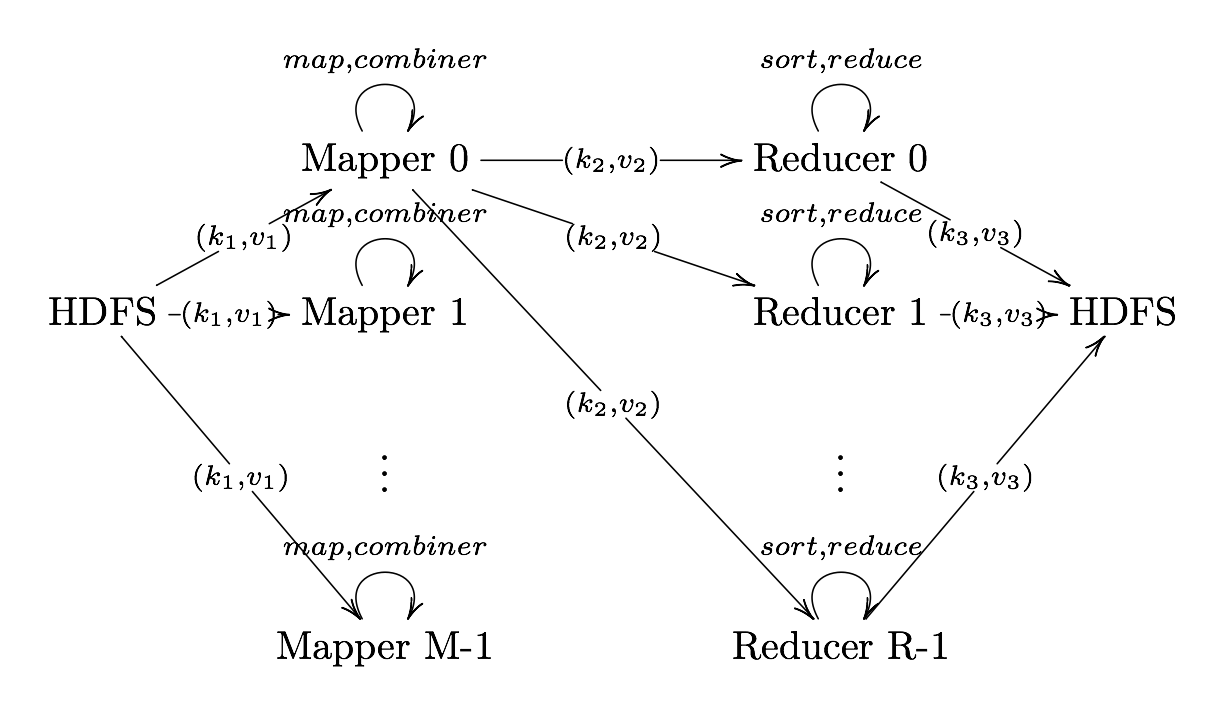

Hadoop MapReduce Components